Visualizing Experiments

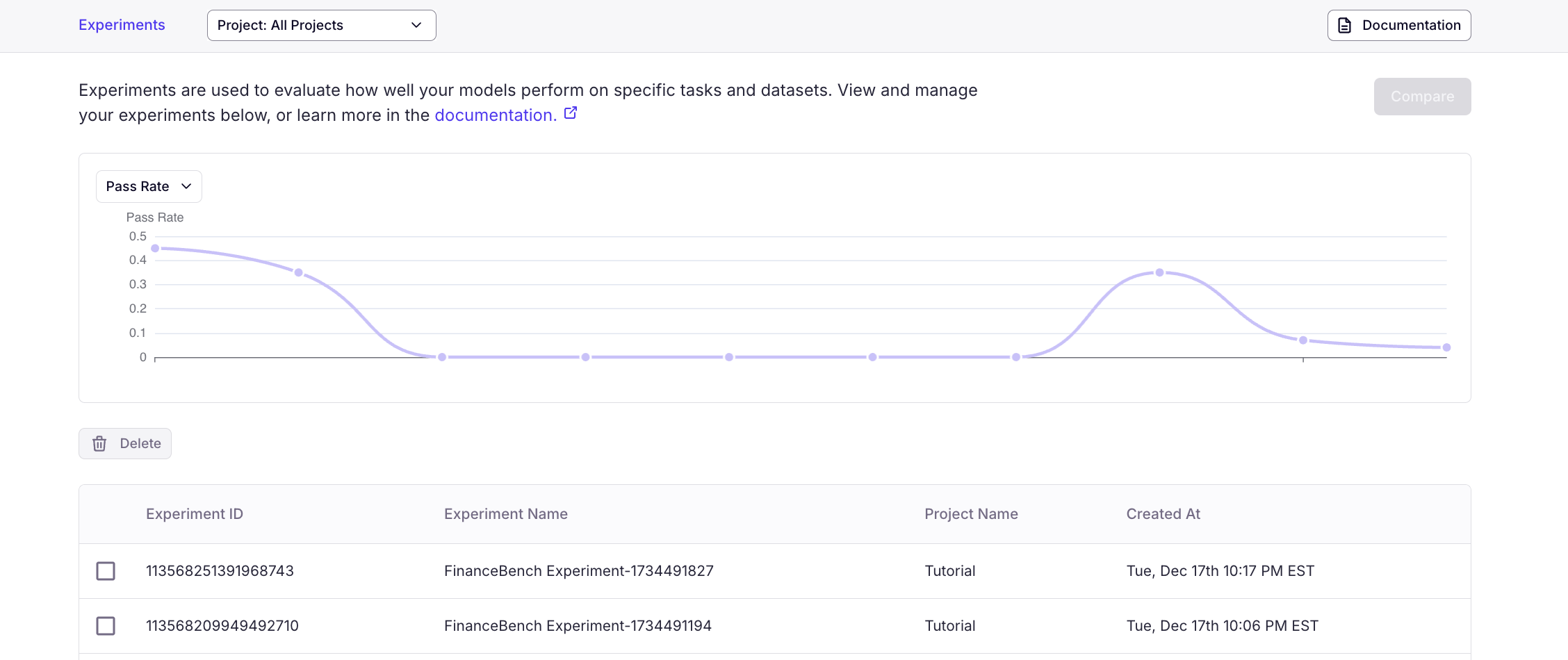

You can visualize experiments in several ways. By default, the Experiments view plots the accuracy, mean score, and evaluation metrics of different experiments over time.
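The Experiments view draws this chart for you. As an illustration only, the sketch below shows one way the per-experiment numbers behind such a time series could be computed from raw evaluation results. The `EvalResult` record and its fields are hypothetical, and so are the definitions used here: accuracy as the fraction of passing results, and mean score as the arithmetic mean of all scores in an experiment. The product may define or compute these differently.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


# Hypothetical shape of one evaluation result; not the product's actual schema.
@dataclass
class EvalResult:
    experiment: str
    created_at: datetime
    metric: str
    score: float  # assumed to lie in [0, 1]
    passed: bool  # assumed pass/fail verdict from the evaluator


def summarize_over_time(results):
    """Return (experiment, start_time, accuracy, mean_score) tuples ordered by start time."""
    by_experiment = {}
    for r in results:
        by_experiment.setdefault(r.experiment, []).append(r)

    rows = []
    for name, rs in by_experiment.items():
        start = min(r.created_at for r in rs)
        accuracy = sum(r.passed for r in rs) / len(rs)  # assumed: share of passing results
        mean_score = mean(r.score for r in rs)          # assumed: plain average of scores
        rows.append((name, start, accuracy, mean_score))

    # Order experiments chronologically so they can be plotted as a time series.
    rows.sort(key=lambda row: row[1])
    return rows


results = [
    EvalResult("exp-1", datetime(2024, 5, 1), "fuzzy-match", 0.5, False),
    EvalResult("exp-1", datetime(2024, 5, 1), "answer-relevance", 1.0, True),
    EvalResult("exp-2", datetime(2024, 5, 2), "fuzzy-match", 1.0, True),
]
for row in summarize_over_time(results):
    print(row)
```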

Per-metric Performance

When you select a project, we display experiment performance for each evaluation metric separately. In the chart below, answer-relevance and fuzzy-match are plotted across different experiments.

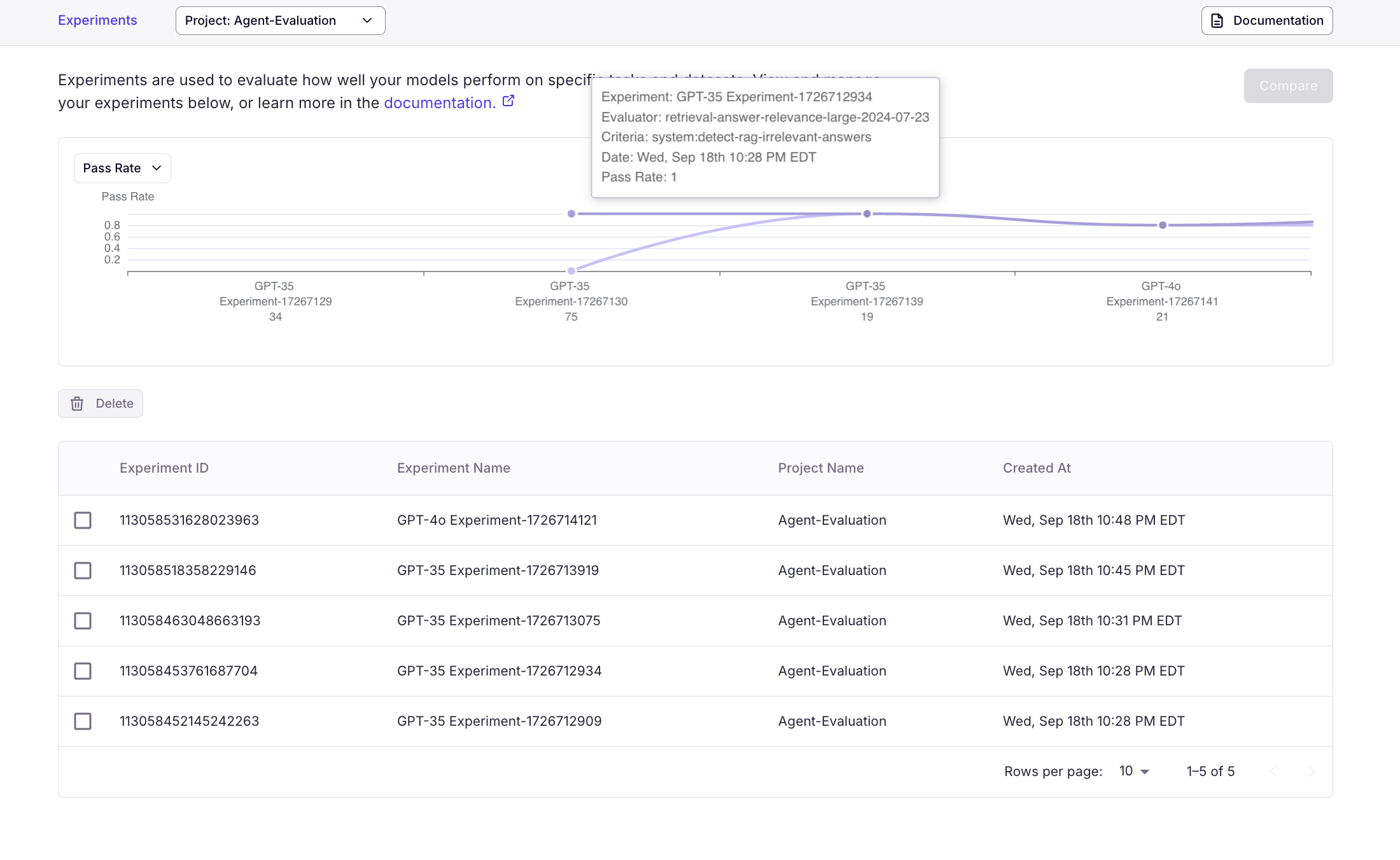
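To show what a per-metric breakdown means in terms of data, here is a minimal sketch that groups raw results into one series per evaluation metric. In that series, each point is an experiment's mean score on that metric. The tuple layout `(experiment, started_at, metric, score)` and the use of a plain mean are assumptions for illustration, not the product's documented behavior.

```python
from collections import defaultdict
from statistics import mean


def per_metric_series(results):
    """Group (experiment, started_at, metric, score) tuples into one series per metric.

    Hypothetical input layout. Returns {metric: [(experiment, mean_score), ...]}
    with experiments ordered by their earliest start time.
    """
    scores = defaultdict(lambda: defaultdict(list))  # metric -> experiment -> scores
    started = {}                                     # experiment -> earliest start time
    for experiment, started_at, metric, score in results:
        scores[metric][experiment].append(score)
        started[experiment] = min(started_at, started.get(experiment, started_at))

    series = {}
    for metric, by_experiment in scores.items():
        ordered = sorted(by_experiment, key=lambda e: started[e])
        series[metric] = [(e, mean(by_experiment[e])) for e in ordered]
    return series
```

Each key of the returned dictionary corresponds to one plotted metric line, such as answer-relevance or fuzzy-match. `started_at` can be any comparable timestamp, for example a `datetime` or an integer run number.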

Per-model Performance

In the default Experiments view, we plot the performance of every project in the same chart. Because of this, you can configure a separate project for each model and compare the models side by side. For example, you can compare GPT-4o with GPT-4o-mini across experiments in an agent evaluation setting.
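Once each model has its own project, the comparison amounts to reading two series against each other. The minimal sketch below pairs the i-th experiment of a baseline project with the i-th experiment of a candidate project and reports the gap in mean score. The input layout is hypothetical: a dictionary that maps a project name (here `"gpt-4o"` and `"gpt-4o-mini"`) to its experiments in run order, where each experiment is a list of per-example scores. Aligning runs by order is also an assumption.

```python
from statistics import mean


def compare_projects(runs, baseline, candidate):
    """Pair the i-th experiment of two projects and report the mean-score gap.

    `runs` (hypothetical layout) maps project name -> list of experiments in
    run order, where each experiment is a list of per-example scores.
    Returns (run_number, baseline_mean, candidate_mean, candidate_minus_baseline).
    """
    rows = []
    # zip stops at the shorter project, so unmatched trailing runs are ignored.
    for i, (b, c) in enumerate(zip(runs[baseline], runs[candidate]), start=1):
        b_mean, c_mean = mean(b), mean(c)
        rows.append((i, b_mean, c_mean, c_mean - b_mean))
    return rows


runs = {
    "gpt-4o": [[1.0, 0.5], [1.0, 1.0]],
    "gpt-4o-mini": [[0.5, 0.5], [0.75, 0.25], [1.0]],
}
for row in compare_projects(runs, "gpt-4o", "gpt-4o-mini"):
    print(row)
```

Pairing by run order keeps the sketch short. A real comparison would more likely align experiments by dataset or by timestamp, so that both models are scored on the same inputs.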
