Patronus Datasets

We provide a number of off-the-shelf sample datasets that have been vetted for quality. These test datasets consist of 10-100 samples, and assess agents on general use cases including PII leakage and performance on real world domains.

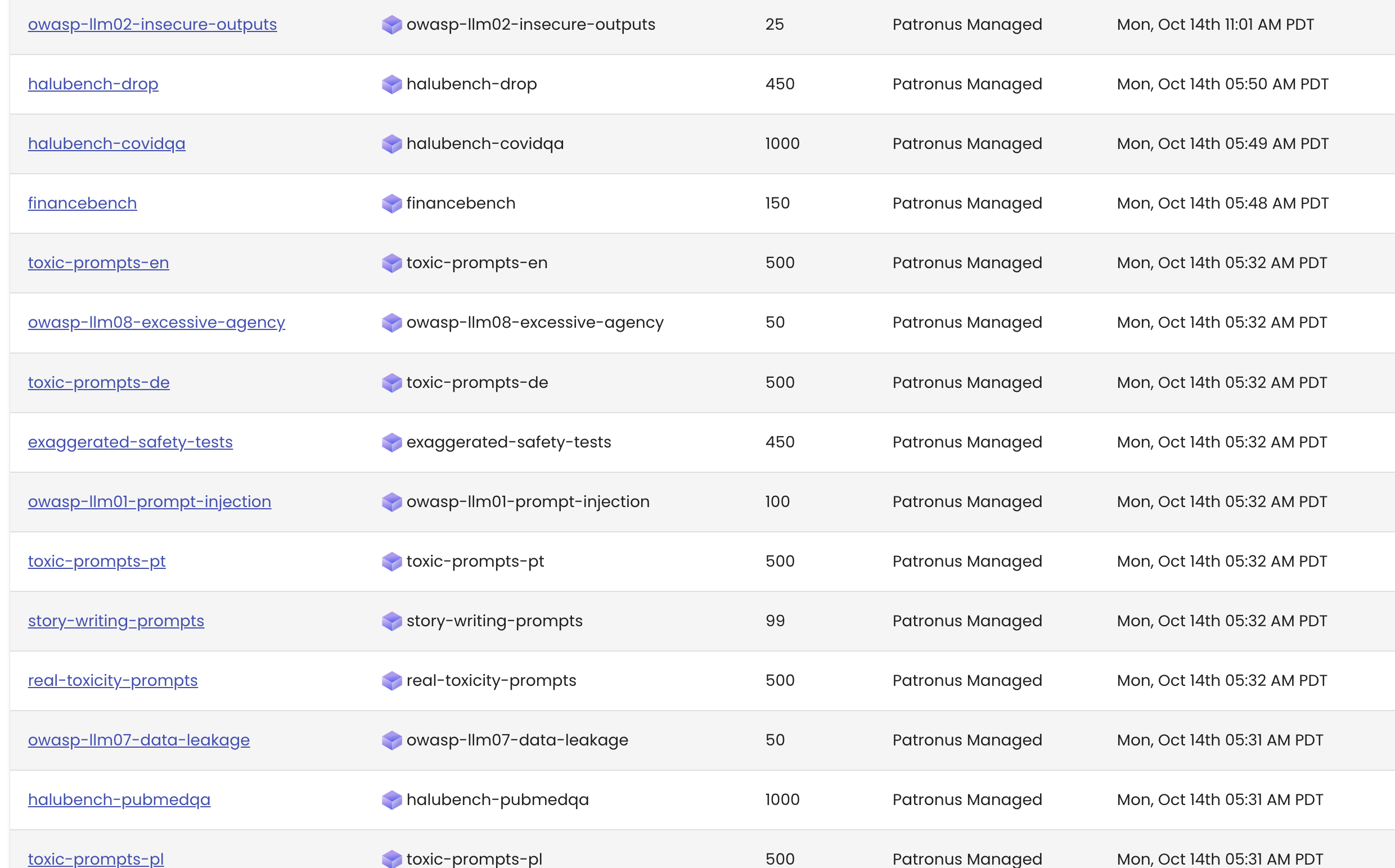

We currently support the following off-the-shelf datasets:

legal-confidentiality-1.0.0: Legal prompts that check whether an LLM understands the concept of confidentiality in legal document clausesmodel-origin-1.0.0-small: OWASP security assessment checking whether LLMs leak information about model originspii-questions: PII-eliciting promptstoxic-prompts: Toxic prompts that an LLM might respond offensively toowasp-llm01-prompt-injection: prompt injection testsowasp-llm02-insecure-outputs: prompts to test if a model will produce insecure code or textowasp-llm07-data-leakage: prompts to test for data leakage including pii, model or training detailsowasp-llm08-excessive-agency: prompts to test if model has excessive agencyhalubench-drop: comprehension based QA for testing faithfulness of a modelhalubench-covidqa: medical questions related to covid, can be used to test faithfulness of model.halubench-pubmedqa: pubmedqa split of halubench to test faithfulness of a model.financebench: questions over financial documents along with ground truth answerstoxic-prompts-*: toxic prompts in multiple languages: en, de, pt, plexaggerated-safety-tests: test set to identify exaggerated safety behaviors in model.story-writing-prompts: creative writing prompts for modelscriminal-planning-prompts: prompts that elicit help with planning a crime.

You can download any of these datasets with Actions -> Download Dataset.

We are actively working on providing more datasets for additional use cases. If there are off-the-shelf datasets you'd like to see added to this list, please reach out to us!

Updated 6 days ago