Real-Time Monitoring

Real-time monitoring is the practice of monitoring and evaluating the outputs of LLM systems as they run in live, online workflows.

LLM applications and agentic systems are inherently unpredictable. Failures in production generative AI systems can lead to dangerous outcomes for both companies and end users. Development teams must log and monitor LLM outputs in live settings to understand performance, catch failures, and mitigate security risks.
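The underlying pattern (evaluate each output as it is produced and log the result) can be sketched generically. This is not the Patronus API: `monitored_call`, `pii_check`, and `EvalRecord` are hypothetical names used only for illustration, the email regex is a deliberately naive stand-in for a real PII evaluator, and the "LLM" is assumed to be any function that maps a prompt string to an output string.

```python
import json
import logging
import re
import time
from dataclasses import asdict, dataclass
from typing import Callable

# Hypothetical, naive PII check: flags anything shaped like an email address.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

logger = logging.getLogger("llm_monitor")


@dataclass
class EvalRecord:
    """One evaluation result for one LLM output (hypothetical schema)."""
    timestamp: float
    prompt: str
    output: str
    evaluator: str
    passed: bool


def pii_check(output: str) -> bool:
    """Return True if no email-like string appears in the output."""
    return EMAIL_PATTERN.search(output) is None


def monitored_call(
    llm: Callable[[str], str],
    prompt: str,
    evaluators: dict[str, Callable[[str], bool]],
) -> tuple[str, list[EvalRecord]]:
    """Call the LLM, run every evaluator on its output inline, and log each result."""
    output = llm(prompt)
    records = []
    for name, check in evaluators.items():
        record = EvalRecord(time.time(), prompt, output, name, check(output))
        records.append(record)
        # Failures are logged at WARNING so they stand out in production logs.
        level = logging.INFO if record.passed else logging.WARNING
        logger.log(level, json.dumps(asdict(record)))
    return output, records


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)

    def fake_llm(prompt: str) -> str:
        # Stand-in for a real model call.
        return "Contact me at jane.doe@example.com"

    _, results = monitored_call(fake_llm, "Who should I email?", {"no_pii": pii_check})
    print(results[0].passed)  # False: the output contains an email address
```

In this sketch the evaluation runs synchronously in the request path, which keeps the example simple; a production setup would typically send records to a monitoring backend rather than only to the local log.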

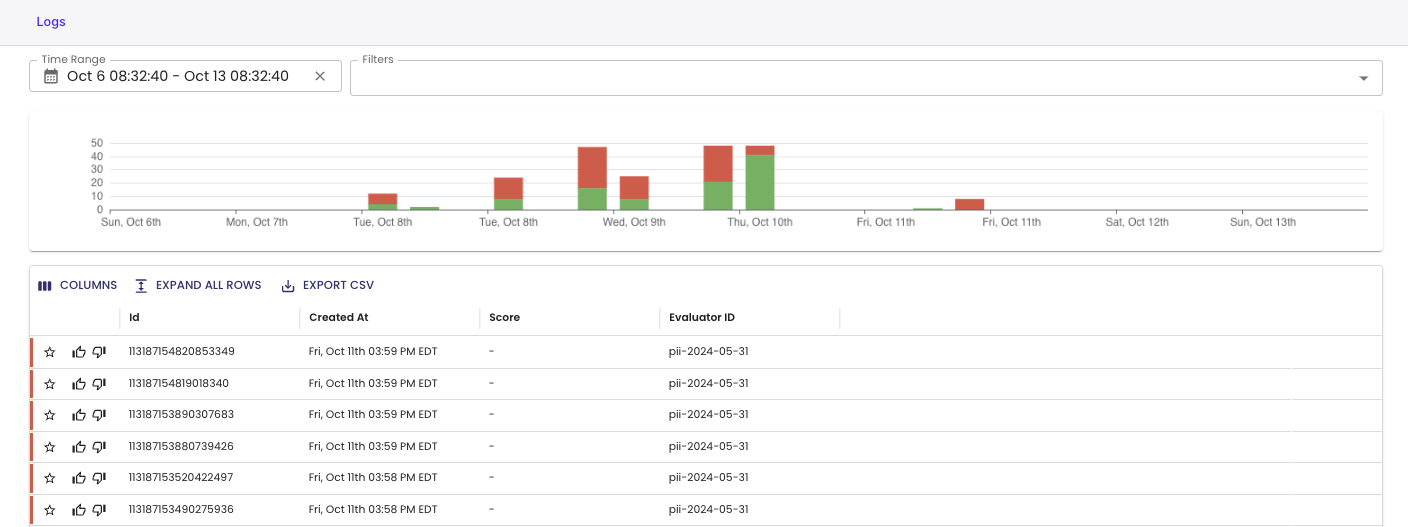

The Patronus evaluation API lets you implement real-time evaluation and monitoring for your generative AI applications and identify when LLM systems produce unexpected outputs. Our explainability features give you insights and tools to perform root cause analysis and drive system performance improvements. Users who have implemented real-time monitoring have caught millions of hallucinations, RAG failures, PII leaks, and unsafe outputs, preventing mission-critical mistakes in production.

The following sections show you how to:

- Log evaluations and configure real-time evaluation in your application

- Apply best practices for online evaluation at scale

- Log results for externally run metrics to the platform

- Configure alerts and ecosystem integrations (coming soon!)
