MongoDB Atlas

Create a MongoDB Atlas Cluster

MongoDB Atlas is a developer data platform providing a suite of cloud database and data services that accelerate and simplify how you build with data.

MongoDB offers a forever-free Atlas cluster in the public cloud, which you can set up after creating an Atlas account. You can also follow the Atlas quickstart to get your free cluster.

Get your Patronus AI API Key

You can create and retrieve an API key on the API Keys page.

Install Patronus and LlamaIndex

We will use the Patronus SDK together with LlamaIndex. LlamaIndex is a data framework that provides an interface for ingesting and indexing datasets, and it integrates well with MongoDB’s Atlas data store.

pip install patronus llama_index

Configure the Document Store

from llama_index.node_parser import SimpleNodeParser

from llama_index.storage.docstore import MongoDocumentStore

nodes = SimpleNodeParser().get_nodes_from_documents(YOUR_DOCUMENTS)

# You can get this from your test deployment in the MongoDB interface

MONGODB_URI = "mongodb+srv://<username>:<password>@<cluster_identifier>.mongodb.net/?retryWrites=true&w=majority"

docstore = MongoDocumentStore.from_uri(uri=MONGODB_URI)

docstore.add_documents(nodes)

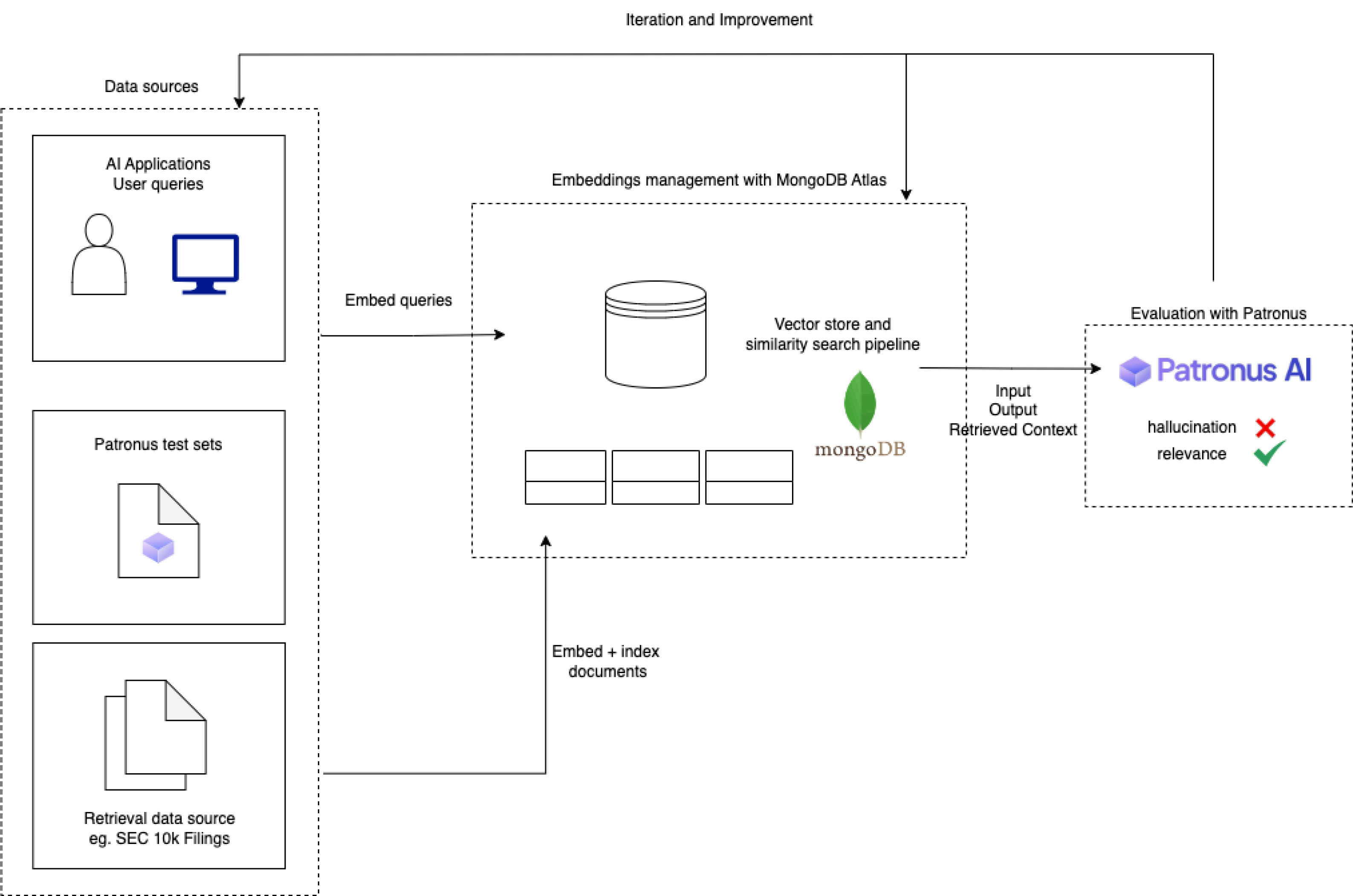
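If the database username or password contains characters such as `@`, `:` or `/`, they need to be percent-encoded before being placed in the connection string. The following is a minimal sketch of one way to assemble the URI; the helper name `build_mongodb_uri` and the example credentials and host are hypothetical and not part of the original guide.

```python
from urllib.parse import quote_plus


def build_mongodb_uri(username: str, password: str, cluster_host: str) -> str:
    """Build an Atlas SRV connection string with percent-encoded credentials."""
    # Characters such as '@', ':' and '/' must be escaped in the user info part
    user = quote_plus(username)
    pwd = quote_plus(password)
    return (
        f"mongodb+srv://{user}:{pwd}@{cluster_host}"
        "/?retryWrites=true&w=majority"
    )


# Hypothetical values, for illustration only
MONGODB_URI = build_mongodb_uri(
    "app_user", "p@ss:word/1", "cluster0.example.mongodb.net"
)
```

The resulting string can be passed to `MongoDocumentStore.from_uri(uri=MONGODB_URI)` in the same way as a hand-written URI.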

RAG System

Below is an overview of an example system:

Query the Retrieval System

First, set up the MongoDB-backed storage context and build a vector index:

from llama_index.storage.docstore import MongoDocumentStore

from llama_index.storage.index_store import MongoIndexStore

from llama_index.storage.storage_context import StorageContext

from llama_index import GPTVectorStoreIndex

docstore = MongoDocumentStore.from_uri(uri=MONGODB_URI, db_name="db_docstore")

storage_context = StorageContext.from_defaults(

    # Reuse the document store created on the previous line

    docstore=docstore,

    index_store=MongoIndexStore.from_uri(uri=MONGODB_URI, db_name="db_docstore"),

)

vector_index = GPTVectorStoreIndex(nodes, storage_context=storage_context)

Now we can get responses:

for user_query in YOUR_INPUTS:

    # Retrieve relevant nodes and generate an answer for this query

    vector_response = vector_index.as_query_engine().query(user_query)

    llm_output = vector_response.response

    source_text = [x.text for x in vector_response.source_nodes]
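The evaluation request later in this guide reads `model_input`, `retrieved_context` and `model_output` from a `sample` dictionary. One possible way to produce such a dictionary from a query result is sketched below. The helper name `build_sample` is hypothetical, and the `SimpleNamespace` objects are stand-ins for a real query-engine response so that the sketch runs without a cluster.

```python
from types import SimpleNamespace


def build_sample(user_query, response):
    """Collect the three fields the evaluator needs from one query result."""
    return {
        "model_input": user_query,
        "retrieved_context": [node.text for node in response.source_nodes],
        "model_output": response.response,
    }


# Stand-in for a query-engine response (assumption: it exposes .response
# and .source_nodes with a .text attribute, as used in the loop above)
fake_response = SimpleNamespace(
    response="Atlas offers a free tier.",
    source_nodes=[SimpleNamespace(text="MongoDB offers a forever free Atlas cluster.")],
)
sample = build_sample("Is there a free Atlas tier?", fake_response)
```

Inside the loop, calling `build_sample(user_query, vector_response)` and appending the result to a list would keep one record per query instead of overwriting the variables on each iteration.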

Patronus Evaluators

Now let's use Lynx, the state-of-the-art hallucination detection evaluator from Patronus:

import requests

# Use the API key you created earlier

PATRONUS_API_KEY = "PREVIOUS_API_KEY"

headers = {

    "Content-Type": "application/json",

    "X-API-KEY": PATRONUS_API_KEY,

}

# sample holds one query's model_input, retrieved_context, and model_output

data = {

    "criteria": [

        {"criterion_id": "lynx"}

    ],

    "input": sample["model_input"],

    "retrieved_context": sample["retrieved_context"],

    "output": sample["model_output"],

    "explain": True

}

response = requests.post("https://api.patronus.ai/v1/evaluate", headers=headers, json=data)

response.raise_for_status()

results = response.json()["results"]
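To evaluate several samples without repeating the request setup, the headers and payload can be built by a small helper. This is only a sketch under stated assumptions: the function name `build_lynx_request` and the `PATRONUS_API_KEY` environment variable are hypothetical choices, while the header names and payload fields are copied from the request above.

```python
import os


def build_lynx_request(sample, api_key=None):
    """Return (headers, payload) for one Lynx evaluation request."""
    # Reading the key from an environment variable is an assumption,
    # chosen to avoid hard-coding secrets in source files
    api_key = api_key or os.environ.get("PATRONUS_API_KEY")
    if not api_key:
        raise ValueError("Set PATRONUS_API_KEY or pass api_key explicitly")
    required = {"model_input", "retrieved_context", "model_output"}
    missing = required - set(sample)
    if missing:
        raise KeyError(f"sample is missing fields: {sorted(missing)}")
    headers = {"Content-Type": "application/json", "X-API-KEY": api_key}
    payload = {
        "criteria": [{"criterion_id": "lynx"}],
        "input": sample["model_input"],
        "retrieved_context": sample["retrieved_context"],
        "output": sample["model_output"],
        "explain": True,
    }
    return headers, payload


headers, payload = build_lynx_request(
    {
        "model_input": "Is there a free Atlas tier?",
        "retrieved_context": ["MongoDB offers a forever free Atlas cluster."],
        "model_output": "Yes, Atlas has a free tier.",
    },
    api_key="test-key",  # placeholder, not a real key
)
```

The returned values can be passed to `requests.post(..., headers=headers, json=payload)` exactly as in the request above, once per sample.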

Now you have a functional RAG system! 🚀
