Logging User-Defined Scores (API)

Developers who are not using our Python SDK can import their evaluation results through our API. The endpoint is POST /v1/evaluation-results/batch, and it is documented in the API reference. Because it is a batch endpoint, a single request can import multiple evaluation results.

Below is a sample cURL request for our batch logging endpoint.

curl --location 'https://api.patronus.ai/v1/evaluation-results/batch' \
--header 'Content-Type: application/json' \
--header 'X-API-KEY: ••••••' \
--data '{
    "evaluation_results": [
        {
            "evaluator_id": "my-answer-relevance-evaluator",
            "pass": true,
            "score_raw": 0.92,
            "evaluated_model_input": "Did apples ever inspire a scientist?",
            "evaluated_model_output": "Yes! Sir Isaac Newton observed an apple fall from a tree which sparked his curiosity about why objects fall straight down and not sideways.",
            "tags": {
                "scientist": "Isaac Newton"
            },
            "app": "scientist-knowledge-model"
        },
        {
            "evaluator_id": "my-context-relevance-evaluator",
            "pass": false,
            "score_raw": 0.1,
            "evaluated_model_input": "My cat is my best friend. Do you feel that way about your cat?",
            "evaluated_model_retrieved_context": [
                "Dogs are man'\''s best friend"
            ],
            "tags": {
                "animals": "true"
            }
        }
    ]
}'
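For teams that prefer a script to a shell command, the same request can be sketched in Python using only the standard library. This is a minimal sketch, not an official client: the helper names `build_batch_request` and `send_batch` are hypothetical, and only the URL, headers, and payload fields shown in the cURL example above are assumed. The response body is returned undecoded because its format is not described on this page.

```python
import json
import urllib.request

# Endpoint taken from the cURL example above.
BATCH_URL = "https://api.patronus.ai/v1/evaluation-results/batch"


def build_batch_request(evaluation_results, api_key):
    """Build (but do not send) a POST request for the batch endpoint.

    Hypothetical helper: it wraps the list in the same
    {"evaluation_results": [...]} envelope used by the cURL example.
    """
    body = json.dumps({"evaluation_results": evaluation_results}).encode("utf-8")
    return urllib.request.Request(
        BATCH_URL,
        data=body,
        headers={"Content-Type": "application/json", "X-API-KEY": api_key},
        method="POST",
    )


def send_batch(evaluation_results, api_key, timeout=30):
    """Send the batch and return (HTTP status code, raw response bytes).

    urllib raises urllib.error.HTTPError for 4xx/5xx responses,
    so callers may want to catch that exception.
    """
    request = build_batch_request(evaluation_results, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read()


# Same fields as the first result in the cURL example.
results = [
    {
        "evaluator_id": "my-answer-relevance-evaluator",
        "pass": True,
        "score_raw": 0.92,
        "evaluated_model_input": "Did apples ever inspire a scientist?",
        "evaluated_model_output": (
            "Yes! Sir Isaac Newton observed an apple fall from a tree which "
            "sparked his curiosity about why objects fall straight down and "
            "not sideways."
        ),
        "tags": {"scientist": "Isaac Newton"},
        "app": "scientist-knowledge-model",
    },
]
```

Calling `send_batch(results, api_key)` with a valid key sends the request. Reading the key from an environment variable rather than hard-coding it keeps it out of source control; the variable name is up to you.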

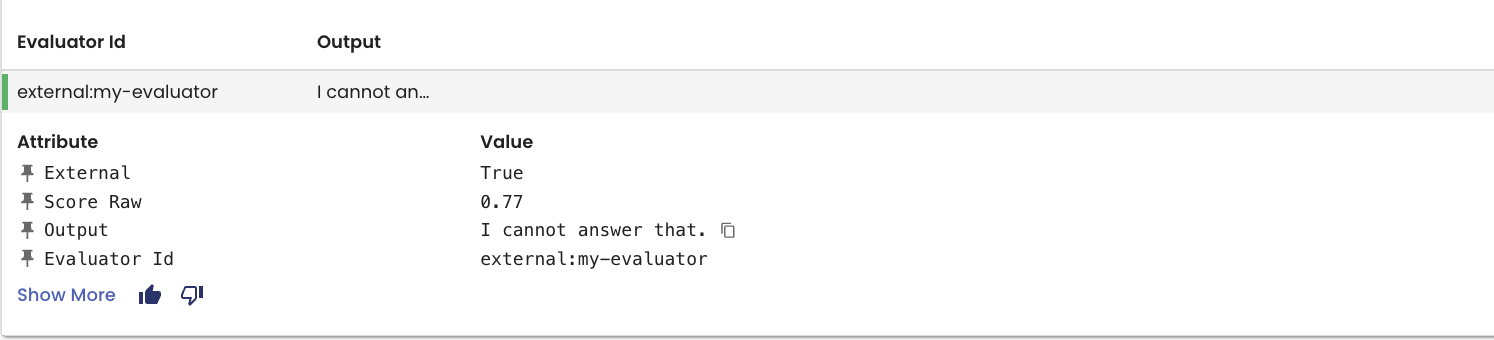

You can now view these eval logs in the Logs view!
