Import External Evaluations

If you're running evaluations outside of the Patronus platform, you can bring them into the platform to get a consolidated view of your GenAI system.

Use the Logs and Comparisons features to understand your LLM's performance across all evaluations, whether they are run through Patronus or not.

Evaluation results are imported with the `POST /v1/evaluation-results/batch` endpoint; see its API documentation for the full request schema. Because it is a batch API, you can import multiple evaluation results in a single request.
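Because the endpoint accepts a batch, one convenient pattern is to build one JSON object per evaluation and wrap them all in the request envelope just before sending. The sketch below assumes the envelope is a single `evaluation_results` array, as in the sample cURL request on this page. `build_batch_payload` is a hypothetical helper, not part of any Patronus tooling, and it does not validate its input.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not a Patronus tool): wraps pre-built JSON objects
# into the batch envelope used by POST /v1/evaluation-results/batch.
# Assumes each argument is already a valid JSON object; nothing is validated.
build_batch_payload() {
  # "$*" joins all arguments with the first character of IFS,
  # so a local IFS of ',' produces a comma-separated JSON array body.
  local IFS=','
  printf '{"evaluation_results": [%s]}\n' "$*"
}
```

For example, `payload=$(build_batch_payload "$result_1" "$result_2")` followed by a cURL call with `--data "$payload"` would import both results in one request.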

All features available for evaluations run through Patronus, such as organizing evaluations into Apps, adding tags and filters, and specifying raw scores and pass/fail results, are also available for imported evaluations. The sample cURL request below showcases these features.

    curl --location 'https://api.patronus.ai/v1/evaluation-results/batch' \
      --header 'Content-Type: application/json' \
      --header 'X-API-KEY: ••••••' \
      --data '{
        "evaluation_results": [
          {
            "evaluator_id": "my-answer-relevance-evaluator",
            "pass": true,
            "score_raw": 0.92,
            "evaluated_model_input": "Did apples ever inspire a scientist?",
            "evaluated_model_output": "Yes! Sir Isaac Newton observed an apple fall from a tree which sparked his curiosity about why objects fall straight down and not sideways.",
            "tags": {
              "scientist": "Isaac Newton"
            },
            "app": "scientist-knowledge-model"
          },
          {
            "evaluator_id": "my-context-relevance-evaluator",
            "pass": false,
            "score_raw": 0.1,
            "evaluated_model_input": "My cat is my best friend. Do you feel that way about your cat?",
            "evaluated_model_retrieved_context": [
              "Dogs are man'\''s best friend"
            ],
            "tags": {
              "animals": "true"
            }
          }
        ]
      }'
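The sample request above hard-codes the API key in the header. If you prefer to keep the key off the command line and send a payload stored in a file, the sketch below shows one way to do it. The function name `import_evaluations` and the `PATRONUS_API_KEY` environment variable name are assumptions for illustration; `--data-binary @file`, `--fail`, `--silent`, and `--show-error` are standard curl options.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the batch import endpoint.
# Assumes the API key is exported as PATRONUS_API_KEY (an illustrative name)
# and that $1 is a file holding a {"evaluation_results": [...]} payload.
import_evaluations() {
  local payload_file="$1"
  if [[ -z "${PATRONUS_API_KEY:-}" ]]; then
    echo "PATRONUS_API_KEY is not set" >&2
    return 1
  fi
  if [[ ! -f "$payload_file" ]]; then
    echo "payload file not found: $payload_file" >&2
    return 1
  fi
  # --data-binary sends the file byte-for-byte; --fail makes curl exit
  # non-zero on HTTP error responses so callers can detect failures.
  curl --silent --show-error --fail --location \
    'https://api.patronus.ai/v1/evaluation-results/batch' \
    --header 'Content-Type: application/json' \
    --header "X-API-KEY: ${PATRONUS_API_KEY}" \
    --data-binary "@${payload_file}"
}
```

Usage: `import_evaluations results.json`. The function returns early, without contacting the API, if the key or the payload file is missing.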

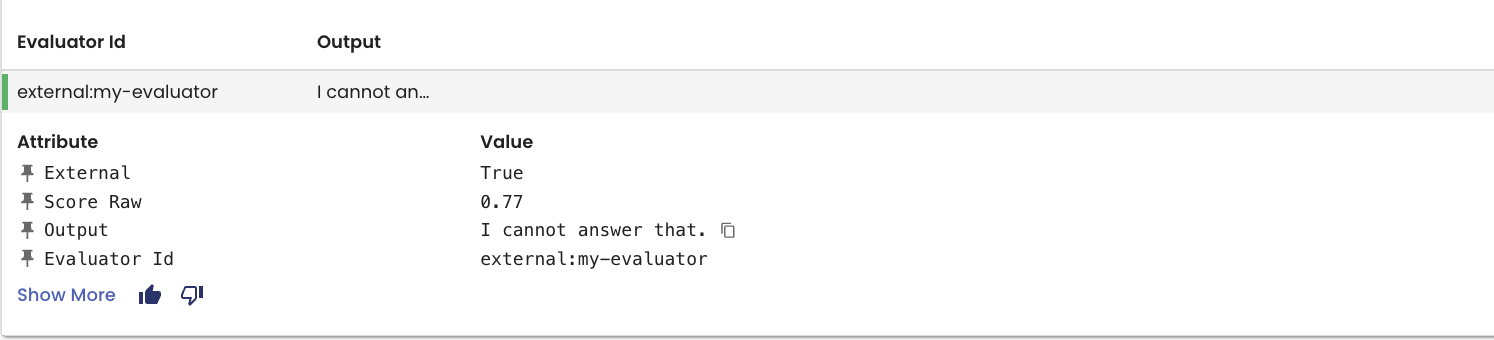

Imported evaluation results are distinguished from those generated through the Patronus platform in the following ways:

- The attribute `External` is set to `True`.
- The `Evaluator Id` is prepended with the keyword `external:`, so that evaluator names for imported evaluations do not clash with those of evaluations run through Patronus.

Both of these are visible in Logs.
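The prefixing rule can be illustrated with a small sketch: an evaluator imported as `my-answer-relevance-evaluator` (from the sample request) would be listed as `external:my-answer-relevance-evaluator`, so it cannot collide with a Patronus evaluator of the same name. The helper names below are hypothetical; the sketch only mirrors the naming rule described above and does not call the API.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that mirror the documented naming rule for
# imported evaluations: the evaluator ID gains an "external:" prefix.
to_external_evaluator_id() {
  printf 'external:%s\n' "$1"
}

# Succeeds (exit status 0) when an evaluator ID carries the prefix.
is_external_evaluator_id() {
  [[ "$1" == external:* ]]
}
```

For instance, `to_external_evaluator_id my-answer-relevance-evaluator` prints `external:my-answer-relevance-evaluator`.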
