Failure Mode Clustering with Nomic Atlas

Patronus is a great way to determine whether the outputs from your LLM are correct. We run those outputs through a series of evaluators and return automated pass/fail results to you. This helps you quantify correctness, which is the first step in improving the performance of your model.

If you want more actionable insights, one approach is to export those results and dig deeper into what's going on. Nomic Atlas is a great tool for that. It visualizes the results as an embedding map, so you can see the semantic similarity between questions and answers. Our friends at Nomic did exactly that with HaluBench, our open-source hallucination benchmarking dataset, and wrote a great blog post about it here.

This quick tutorial uses a much simpler dataset. It walks step by step through generating results in Patronus, filtering them in LLM Monitoring, exporting them to CSV, and uploading them to Nomic Atlas to take advantage of its capabilities.

Generating Results

For this demo, we run the script below on the associated dataset of 100 questions in JSONL format. The questions are taken from a FlexiQuiz blog post available here. Each row has three fields:

- a prompt (`text`) that we feed into Llama 3.1 8B through the Together API;
- a golden answer provided by FlexiQuiz (`label`);
- a question category for tagging purposes (`category`).

We then call the Patronus API with the system:is-similar-to-gold-answer evaluator profile. It checks whether the model output matches the meaning of the golden answer stored in `label`.

```jsonl
{"text": "What is the main ingredient in guacamole?", "label": "Avocado", "category": "Food and drink"}
{"text": "Which country is famous for its pasta dishes such as spaghetti and lasagna?", "label": "Italy", "category": "Food and drink"}
{"text": "What is the primary ingredient in hummus?", "label": "Chickpeas (garbanzo beans)", "category": "Food and drink"}
{"text": "Which vegetable is known for its association with Popeye the Sailor?", "label": "Spinach", "category": "Food and drink"}
{"text": "What is the national dish of Japan?", "label": "Sushi", "category": "Food and drink"}
{"text": "What is the main ingredient in a traditional Greek salad?", "label": "Feta cheese", "category": "Food and drink"}
{"text": "What fruit is known as 'The King of Fruits' and has a distinct smell?", "label": "Durian", "category": "Food and drink"}
{"text": "What is the key ingredient in a margarita pizza?", "label": "Fresh mozzarella cheese", "category": "Food and drink"}
{"text": "What type of food is brie?", "label": "Cheese", "category": "Food and drink"}
{"text": "Which nut is used to make marzipan?", "label": "Almonds", "category": "Food and drink"}
{"text": "What is the largest ocean on Earth?", "label": "The Pacific Ocean", "category": "Oceans trivia"}
{"text": "Which ocean is located between Africa and Australia?", "label": "The Indian Ocean", "category": "Oceans trivia"}
{"text": "What percentage of the Earth's surface is covered by oceans?", "label": "Approximately 71%", "category": "Oceans trivia"}
{"text": "Which ocean is the saltiest?", "label": "The Atlantic Ocean", "category": "Oceans trivia"}
{"text": "What is the largest coral reef system in the world?", "label": "The Great Barrier Reef", "category": "Oceans trivia"}
{"text": "What is the process by which ocean water becomes vapor and rises into the atmosphere?", "label": "Evaporation", "category": "Oceans trivia"}
{"text": "What is the name of the scientific study of the oceans?", "label": "Oceanography", "category": "Oceans trivia"}
{"text": "Which ocean is known for its vibrant and diverse marine life, including the Great Barrier Reef?", "label": "The Pacific Ocean", "category": "Oceans trivia"}
{"text": "What is the process by which icebergs break off from glaciers and float into the ocean?", "label": "Calving", "category": "Oceans trivia"}
{"text": "What is the deepest point in the ocean?", "label": "The Mariana Trench", "category": "Oceans trivia"}
{"text": "Which country hosted the FIFA World Cup in 2010?", "label": "South Africa", "category": "2010's trivia"}
{"text": "Which social media platform became increasingly popular during the 2010s, known for its 140-character limit on posts?", "label": "Twitter", "category": "2010's trivia"}
{"text": "Which artist released the hit single 'Rolling in the Deep' in 2010?", "label": "Adele", "category": "2010's trivia"}
{"text": "What was the title of the first film in the Marvel Cinematic Universe released in 2010?", "label": "Iron Man 2", "category": "2010's trivia"}
{"text": "Which country won the most gold medals in the 2012 Summer Olympics held in London?", "label": "The United States", "category": "2010's trivia"}
{"text": "What was the name of the spacecraft that successfully landed on Mars in 2012?", "label": "Curiosity (Mars Rover)", "category": "2010's trivia"}
{"text": "Which country hosted the Olympic Games in 2016?", "label": "Brazil (Rio de Janeiro)", "category": "2010's trivia"}
{"text": "Which streaming platform gained popularity during the 2010s, known for its original shows like 'Stranger Things' and 'House of Cards'?", "label": "Netflix", "category": "2010's trivia"}
{"text": "Which artist released the song 'Uptown Funk' featuring Bruno Mars in 2014?", "label": "Mark Ronson", "category": "2010's trivia"}
{"text": "Which British TV series centered around a time-traveling alien celebrated its 50th anniversary in 2013?", "label": "Doctor Who", "category": "2010's trivia"}
{"text": "What is the largest land animal in the world?", "label": "The African elephant", "category": "Wildlife questions"}
{"text": "What is the fastest land animal?", "label": "The cheetah", "category": "Wildlife questions"}
{"text": "Which bird is known for its ability to mimic human speech?", "label": "The African grey parrot", "category": "Wildlife questions"}
{"text": "Which animal is known for its distinctive black and white stripes?", "label": "The zebra", "category": "Wildlife questions"}
{"text": "Which species of penguin lives in Antarctica?", "label": "The emperor penguin", "category": "Wildlife questions"}
{"text": "What is the smallest species of mammal?", "label": "The bumblebee bat", "category": "Wildlife questions"}
{"text": "What is the national bird of the United States?", "label": "The bald eagle", "category": "Wildlife questions"}
{"text": "Which animal is the symbol of wisdom in many cultures?", "label": "The owl", "category": "Wildlife questions"}
{"text": "What is the fastest marine animal?", "label": "The sailfish", "category": "Wildlife questions"}
{"text": "Which animal is known as the 'king of the jungle'?", "label": "The lion", "category": "Wildlife questions"}
{"text": "Who was the first person to walk on the moon?", "label": "Neil Armstrong", "category": "Famous personalities"}
{"text": "Which scientist formulated the theory of relativity?", "label": "Albert Einstein", "category": "Famous personalities"}
{"text": "Who is the author of the Harry Potter book series?", "label": "J.K. Rowling", "category": "Famous personalities"}
{"text": "Who was the famous civil rights leader who advocated for equal rights for African Americans?", "label": "Martin Luther King Jr.", "category": "Famous personalities"}
{"text": "Who was the lead vocalist of the band Queen?", "label": "Freddie Mercury", "category": "Famous personalities"}
{"text": "Who is the scientist known for his theory of evolution?", "label": "Charles Darwin", "category": "Famous personalities"}
{"text": "Who painted the famous artwork 'The Starry Night'?", "label": "Vincent van Gogh", "category": "Famous personalities"}
{"text": "Who is the legendary basketball player known as 'Air Jordan'?", "label": "Michael Jordan", "category": "Famous personalities"}
{"text": "Who was the first actor to portray James Bond in the film series?", "label": "Sean Connery", "category": "Famous personalities"}
{"text": "Who was the first woman to win a Nobel Prize?", "label": "Marie Curie", "category": "Famous personalities"}
{"text": "In the TV show 'Friends,' which character famously says, 'How you doin'?'?", "label": "Joey Tribbiani", "category": "TV shows"}
{"text": "What is the fictional city where 'The Simpsons' is set?", "label": "Springfield", "category": "TV shows"}
{"text": "Who is the main character in the TV show 'Breaking Bad'?", "label": "Walter White", "category": "TV shows"}
{"text": "What is the name of the high school in the TV show 'Glee'?", "label": "William McKinley High School", "category": "TV shows"}
{"text": "What is the name of the fantasy drama series based on George R.R. Martin's novels?", "label": "'Game of Thrones'", "category": "TV shows"}
{"text": "Who plays the character Eleven in the TV show 'Stranger Things'?", "label": "Millie Bobby Brown", "category": "TV shows"}
{"text": "Which TV show follows the lives of a group of doctors at Grey Sloan Memorial Hospital?", "label": "'Grey's Anatomy'", "category": "TV shows"}
{"text": "Which TV show features a group of survivors trying to navigate a post-apocalyptic world?", "label": "'The Walking Dead'", "category": "TV shows"}
{"text": "What is the name of the motel the Rose family stays at in 'Schitt's Creek'?", "label": "The Rosebud Motel", "category": "TV shows"}
{"text": "Which TV show holds the record for the most Emmy Awards won by a scripted series?", "label": "'Game of Thrones'", "category": "TV shows"}
{"text": "Who holds the record for the most career home runs in Major League Baseball?", "label": "Barry Bonds (with 762 home runs)", "category": "Baseball"}
{"text": "What is the name of the oldest active baseball stadium in the United States?", "label": "Fenway Park (home of the Boston Red Sox)", "category": "Baseball"}
{"text": "Who was the first African American player in Major League Baseball?", "label": "Jackie Robinson", "category": "Baseball"}
{"text": "What is the name of the annual championship series in Major League Baseball?", "label": "The World Series", "category": "Baseball"}
{"text": "What is the term for hitting a home run when all bases are occupied, resulting in four runs?", "label": "Grand Slam", "category": "Baseball"}
{"text": "Which pitcher threw a perfect game in the World Series?", "label": "Don Larsen (in Game 5 of the 1956 World Series)", "category": "Baseball"}
{"text": "Which team holds the record for the most career strikeouts by a pitcher in Major League Baseball?", "label": "New York Yankees (with 27 championships)", "category": "Baseball"}
{"text": "Who won the World Series in the 2020 MLB season?", "label": "Los Angeles Dodgers", "category": "Baseball"}
{"text": "In 2021, which team had the longest winning streak in the regular season, with 22 consecutive wins?", "label": "Cleveland Guardians", "category": "Baseball"}
{"text": "Which player hit the most home runs in the 2020 MLB season?", "label": "Luke Voit (New York Yankees) with 22 home runs", "category": "Baseball"}
{"text": "What is the largest planet in our solar system?", "label": "Jupiter", "category": "Space"}
{"text": "What is the name of the closest star to Earth?", "label": "The Sun", "category": "Space"}
{"text": "What is the name of the galaxy that contains our solar system?", "label": "The Milky Way", "category": "Space"}
{"text": "Which planet is known as the 'Red Planet'?", "label": "Mars", "category": "Space"}
{"text": "What is the name of the largest moon in our solar system?", "label": "Ganymede (a moon of Jupiter)", "category": "Space"}
{"text": "Which space probe was launched in 1977 and has now left our solar system?", "label": "Voyager 1", "category": "Space"}
{"text": "What is the term for a massive explosion that marks the death of a high-mass star?", "label": "Supernova", "category": "Space"}
{"text": "What is the process by which light is produced in the Sun and other stars?", "label": "Nuclear fusion", "category": "Space"}
{"text": "What is the name of the spacecraft developed by SpaceX that is designed to transport astronauts to and from the ISS?", "label": "Crew Dragon", "category": "Space"}
{"text": "Who is the founder and CEO of SpaceX?", "label": "Elon Musk", "category": "Space"}
{"text": "Which European city is known as the 'City of Love'?", "label": "Paris", "category": "Europe"}
{"text": "Which European country is famous for its tulips, windmills, and wooden shoes?", "label": "The Netherlands", "category": "Europe"}
{"text": "Which country is home to the famous Neuschwanstein Castle, said to be the inspiration for Disney's Sleeping Beauty castle?", "label": "Germany", "category": "Europe"}
{"text": "Which European country is divided into two parts by the Bosphorus Strait?", "label": "Turkey (Istanbul)", "category": "Europe"}
{"text": "Which country is famous for its canals and gondolas?", "label": "Italy (Venice)", "category": "Europe"}
{"text": "Which European city is home to the Acropolis, a UNESCO World Heritage Site featuring the Parthenon?", "label": "Athens, Greece", "category": "Europe"}
{"text": "Which country in Europe is known for its iconic Blue Mosque and Grand Bazaar?", "label": "Turkey", "category": "Europe"}
{"text": "Which European country is home to the Guinness Storehouse, where the famous Irish stout is brewed?", "label": "Ireland", "category": "Europe"}
{"text": "Which country is known for its delicious Swiss chocolate and watches?", "label": "Switzerland", "category": "Europe"}
{"text": "Which country is the birthplace of the legendary band, The Beatles?", "label": "United Kingdom (specifically Liverpool, England)", "category": "Europe"}
{"text": "What is the alter ego of Batman?", "label": "Bruce Wayne", "category": "Superheroes"}
{"text": "What is the real name of Captain America?", "label": "Steve Rogers", "category": "Superheroes"}
{"text": "Who is the arch-nemesis of Spider-Man?", "label": "Professor Charles Xavier (Professor X)", "category": "Superheroes"}
{"text": "Which superhero is known as the 'Man of Steel'?", "label": "Superman", "category": "Superheroes"}
{"text": "What is the real name of Wonder Woman?", "label": "Diana Prince", "category": "Superheroes"}
{"text": "Which superhero is the founding member of the Justice League?", "label": "Batman", "category": "Superheroes"}
{"text": "Which superhero is known for his incredible speed?", "label": "The Flash (Barry Allen)", "category": "Superheroes"}
{"text": "Who is the mutant superheroine with the ability to control weather patterns in the X-Men comics?", "label": "Storm (Ororo Munroe)", "category": "Superheroes"}
{"text": "Which female superhero is a skilled spy and master of espionage in the Marvel universe?", "label": "Black Widow (Natasha Romanoff)", "category": "Superheroes"}
{"text": "Who is the strongest Avenger?", "label": "The Hulk (Bruce Banner)", "category": "Superheroes"}
```

The script depends on the dataset, so download it before running the script. Save it in the same directory as the script, in a file named 100-trivia-questions.jsonl.
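Before you spend API calls, it can help to confirm that the file parses. Each non-blank line should be one JSON object with the three fields the script reads. The sketch below does that check. The function names `load_samples` and `category_counts` are hypothetical helpers, not part of any Patronus or Nomic API. Skipping blank lines is a defensive assumption in case the file was copied with spacing between records.

```python
import json
from collections import Counter

# The three fields the evaluation script reads from every record.
REQUIRED_KEYS = {"text", "label", "category"}


def load_samples(path):
    """Parse a JSONL file into a list of dicts, skipping blank lines and checking required keys."""
    samples = []
    with open(path, "r", encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            if not line.strip():
                continue  # tolerate blank separator lines
            record = json.loads(line)
            missing = REQUIRED_KEYS - set(record)
            if missing:
                raise ValueError(f"line {line_no}: missing keys {sorted(missing)}")
            samples.append(record)
    return samples


def category_counts(samples):
    """Count how many questions fall into each category."""
    return Counter(sample["category"] for sample in samples)
```

For the dataset above, `load_samples("100-trivia-questions.jsonl")` should return 100 records. `category_counts` should then report 10 questions in each of the 10 categories.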

```python
import json

import pandas as pd
import requests
from nomic import atlas
from together import Together

PATRONUS_API_KEY = "YOUR_PATRONUS_KEY"
TOGETHER_API_KEY = "YOUR_TOGETHER_KEY"
# Run `nomic login YOUR_NOMIC_KEY` once to log in to the Nomic CLI before running this script.

# Load the trivia questions (saved next to this script), skipping any blank lines.
samples = []
with open("100-trivia-questions.jsonl", "r", encoding="utf-8") as f:
    for line in f:
        if line.strip():
            samples.append(json.loads(line))

headers = {
    "Content-Type": "application/json",
    "X-API-KEY": PATRONUS_API_KEY,
}

client = Together(api_key=TOGETHER_API_KEY)

pass_counts = {}
rows = []

for i, sample in enumerate(samples):
    # 1. Ask Llama 3.1 8B (via Together) to answer the question.
    completion = client.chat.completions.create(
        model="meta-llama/Meta-Llama-3.1-8B-Instruct-Turbo",
        messages=[
            {"role": "system", "content": "Answer the following question succinctly:"},
            {"role": "user", "content": sample["text"]},
        ],
    )
    sample["model_output"] = completion.choices[0].message.content

    # 2. Ask Patronus whether the answer has the same meaning as the gold label.
    data = {
        "evaluators": [
            {
                "evaluator_id": "custom-small",
                "profile_name": "system:is-similar-to-gold-answer",
            },
        ],
        "evaluated_model_input": sample["text"],
        "evaluated_model_output": sample["model_output"],
        "evaluated_model_gold_answer": sample["label"],
        "app": "test",
        "tags": {"category": sample["category"]},
    }
    response = requests.post(
        "https://api.patronus.ai/v1/evaluate", headers=headers, json=data
    )
    response.raise_for_status()
    results = response.json()["results"]

    # 3. Record one row per evaluator result.
    for result in results:
        # Use the Patronus evaluation ID when present, otherwise fall back to the sample index.
        evaluation_result = result["evaluation_result"]
        row_id = str(evaluation_result["id"] if "id" in evaluation_result else i)
        profile_name = result["profile_name"]
        passed = result["result"]["pass"]
        if passed:
            pass_counts[profile_name] = pass_counts.get(profile_name, 0) + 1
        rows.append(
            {
                "id": row_id,
                "input": sample["text"],
                "output": sample["model_output"],
                "gold_answer": sample["label"],
                "profile_name": profile_name,
                "passed": passed,
            }
        )

print(pass_counts)

df = pd.DataFrame(rows)
# Optionally save the results to CSV and reload them later:
# df.to_csv("nomic-export-evaluation-results.csv", index=False)
# df = pd.read_csv("nomic-export-evaluation-results.csv")

# Upload to Nomic Atlas, embedding the question text ("input") to build the map.
dataset = atlas.map_data(
    identifier="100-trivia-questions",
    data=df,
    is_public=True,
    id_field="id",
    indexed_field="input",
)
```

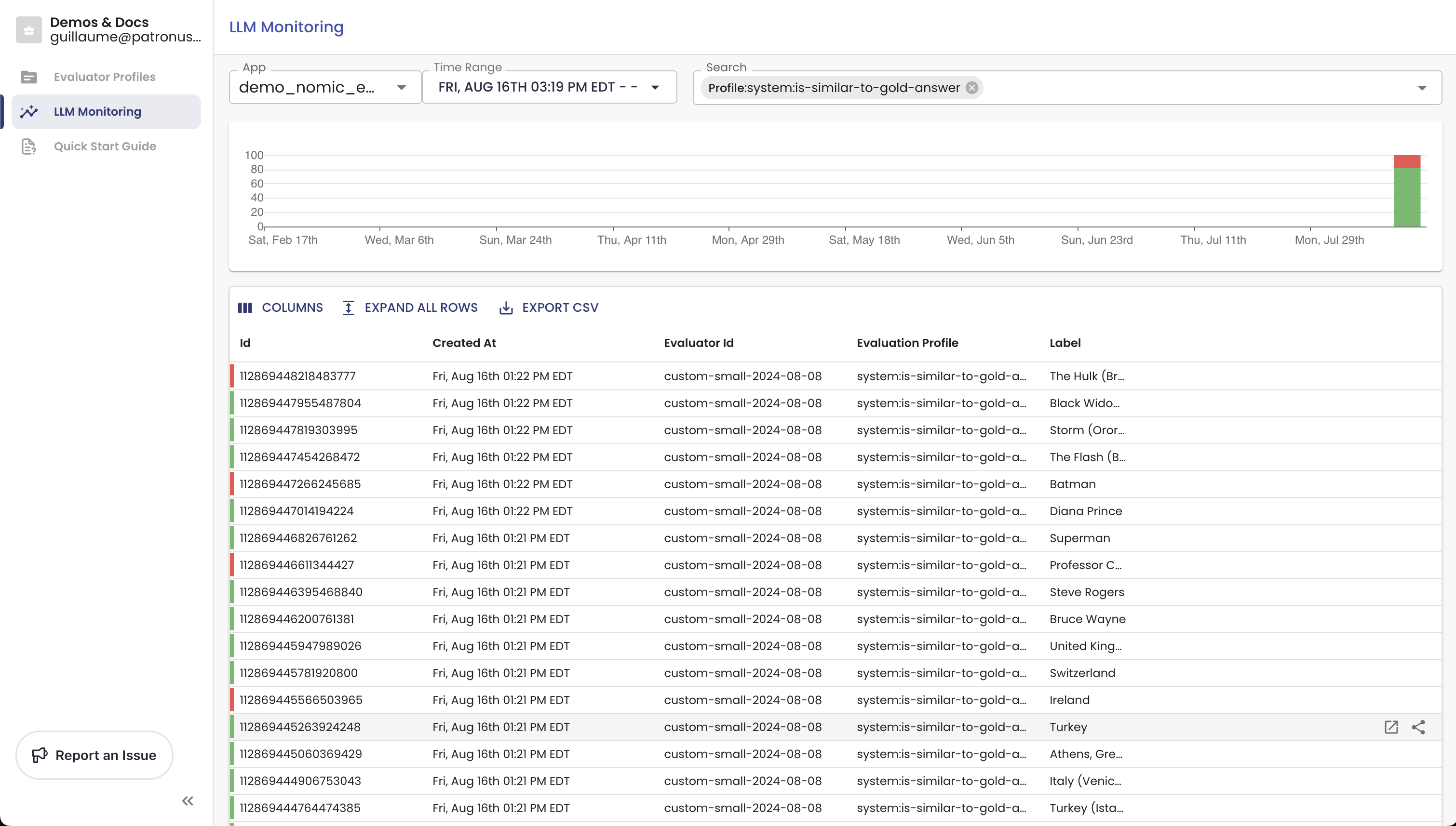

With the dataset and script ready, we can run the script. First, replace the two API key placeholders (PATRONUS_API_KEY and TOGETHER_API_KEY) with your own keys, and log in to the Nomic CLI with `nomic login NOMIC_API_KEY`. You don't have to use Together AI if you have another model you'd like to work with. After running the script with logging capture on, you should see all the results in LLM Monitoring, as shown below.

LLM Monitoring view filtering results using the relevant evaluator profile
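Instead of pasting keys into the script, you can read them from environment variables. That way they don't end up in version control. The sketch below reads the keys from environment variables whose names match the script's constants. The helper `require_env` is a hypothetical name, and the environment-variable approach is our suggestion rather than something the script requires.

```python
import os


def require_env(name):
    """Return the value of an environment variable, failing loudly if it is unset or empty."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Set the {name} environment variable before running the script")
    return value


# Hypothetical usage: replace the hard-coded placeholders in the script with
# PATRONUS_API_KEY = require_env("PATRONUS_API_KEY")
# TOGETHER_API_KEY = require_env("TOGETHER_API_KEY")
```

Failing early with a clear message is cheaper than discovering a missing key after the first API request returns an authentication error.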

LLM Monitoring is a great way to filter for the results you're interested in. You can also open individual evaluation results to learn more about them, including their metadata. It's a powerful view, and we're working on expanding what you can do with it. You may want to do other things with this data too, so you can export any set of results to CSV with the click of a button.

Option #1: Use the Python Nomic SDK to Upload the Dataset

Now let's use Nomic Atlas for failure mode clustering. We can use Atlas to visualize the prompts we fed into our model and see whether Llama 3.1 8B tends to fail on specific types of questions. We'll embed the evaluated_model_input, which is the question text and is stored in the `input` column of the script's DataFrame. Questions on similar topics then sit close together on the map, which shows us whether some topics trip up our model more than others.
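To make "nearby points are similar questions" concrete, here is a small local stand-in that needs no external service. Atlas itself uses learned text embeddings. This sketch swaps in plain bag-of-words vectors compared with cosine similarity. That is a much cruder technique, used here only to illustrate the idea. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercased word counts: a crude stand-in for a real text embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def nearest_questions(query, questions, k=3):
    """Rank the other questions by similarity to `query`, most similar first."""
    q = bag_of_words(query)
    scored = [(cosine(q, bag_of_words(other)), other) for other in questions if other != query]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [other for _, other in scored[:k]]
```

Consider the 2020 home-run question from the dataset. It ranks the career home-run record question above the planet questions, because the two share words like "most", "home", and "runs". Real embeddings also capture similarity in meaning beyond shared words, which is why Atlas can group baseball questions that have little vocabulary in common.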

The script collects each result returned by the Patronus API as a row and turns the rows into a pandas DataFrame. As the code shows, the DataFrame is then uploaded directly to Nomic Atlas with `atlas.map_data` from the Nomic Python SDK. The process is seamless, and you can view the map in the Nomic Atlas dashboard.
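The script prints `pass_counts`, which counts passes only. A pass rate is easier to compare across reruns of different sizes. The sketch below computes it from the script's `rows` list, using the `profile_name` and `passed` keys the script already writes. The function name is hypothetical.

```python
from collections import defaultdict


def pass_rates(rows):
    """Fraction of passing evaluations per evaluator profile, computed from the script's rows."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for row in rows:
        totals[row["profile_name"]] += 1
        passes[row["profile_name"]] += bool(row["passed"])
    return {name: passes[name] / totals[name] for name in totals}
```

With pandas, `df.groupby("profile_name")["passed"].mean()` gives the same numbers, provided `passed` holds booleans.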

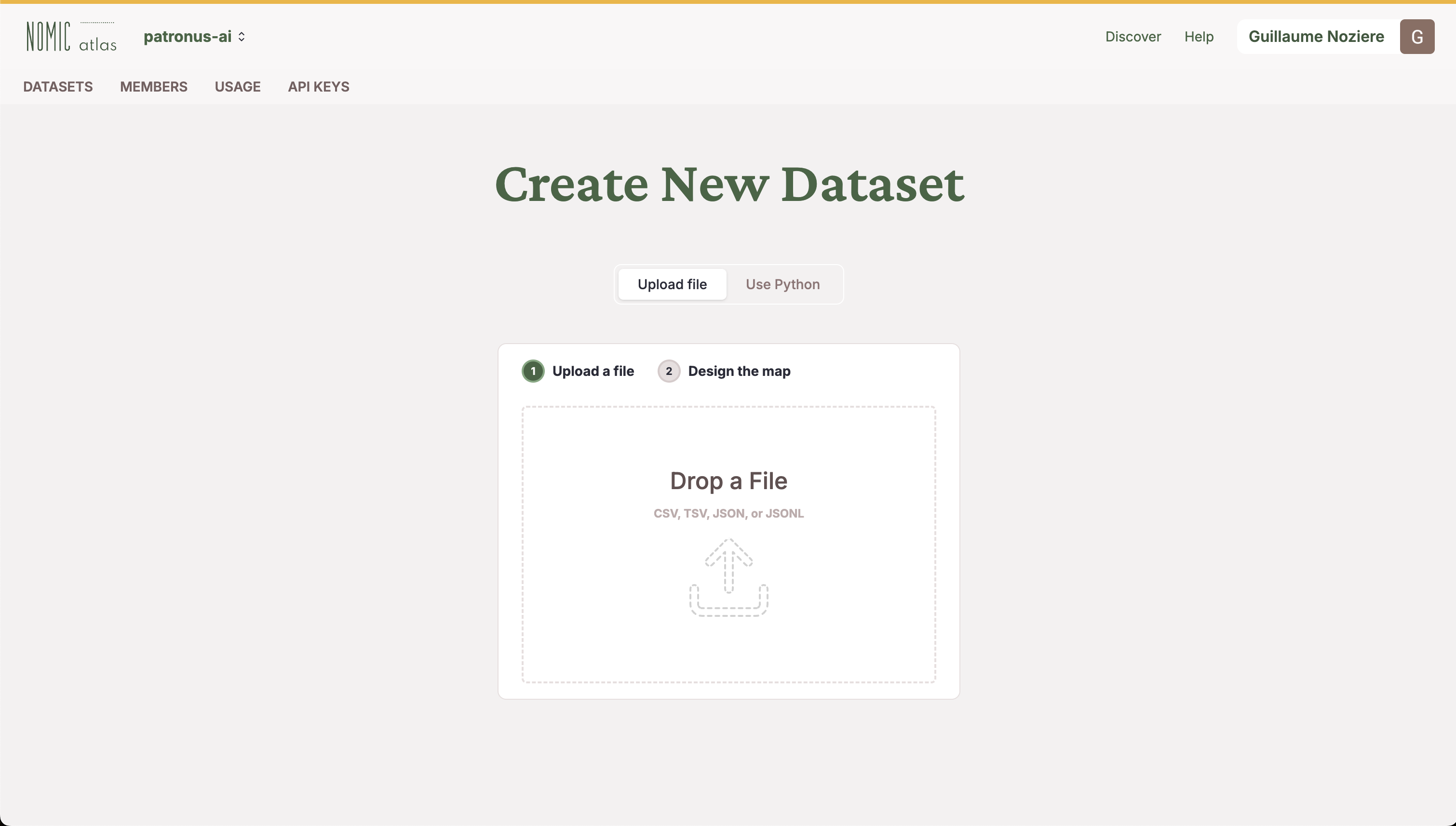

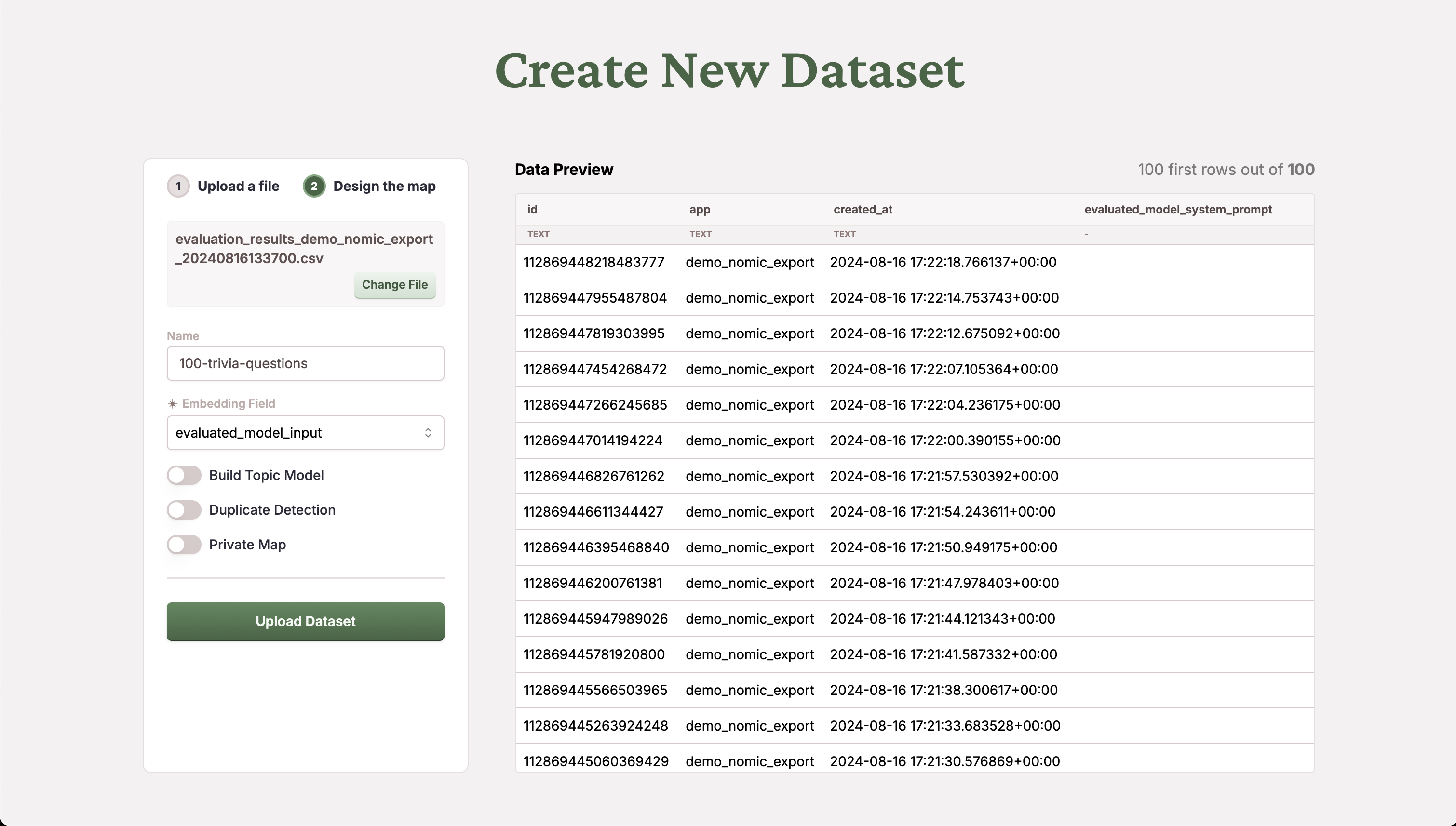

Option #2: Upload the Dataset on the Dashboard with the Results CSV

The first step is to open your dashboard and create a new dataset. You'll be asked to upload it in whichever format suits you best, including CSV. That's good news for us: the data we exported from Patronus is already a CSV, so it can be uploaded directly into Nomic Atlas without any format changes.

Be sure to choose the evaluated_model_input as the embedding column if you want to follow along. You can of course select something else if you'd like to investigate something different. It's often worth visualizing the same dataset using a variety of different embedding fields to better understand what's going on.
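If you upload with the SDK (Option #1), one way to try a different embedding field is to add a text column that combines each question with the model's answer. You then pass that column as `indexed_field`. The sketch assumes the script's `rows` (with `input` and `output` keys). The column name `question_and_answer` and the text template are hypothetical choices.

```python
def with_combined_field(rows, field_name="question_and_answer"):
    """Return copies of the result rows with an extra column joining question and model answer."""
    combined = []
    for row in rows:
        new_row = dict(row)  # copy so the original rows stay untouched
        new_row[field_name] = f"Question: {row['input']}\nModel answer: {row['output']}"
        combined.append(new_row)
    return combined
```

You could then call `atlas.map_data` on `pd.DataFrame(with_combined_field(rows))` with `indexed_field="question_and_answer"` and a new identifier of your choosing. This gives you a second map to compare against the question-only one.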

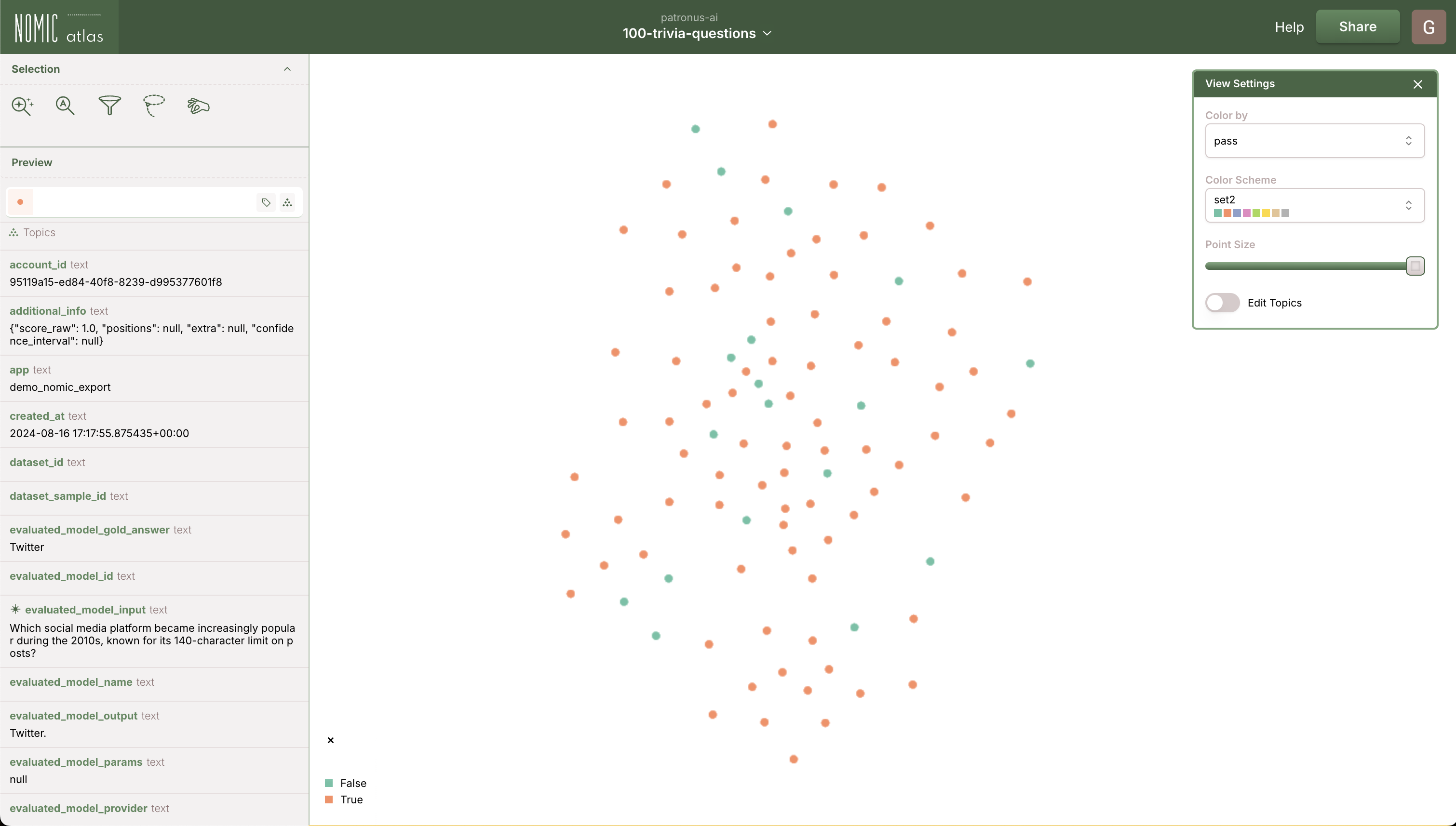

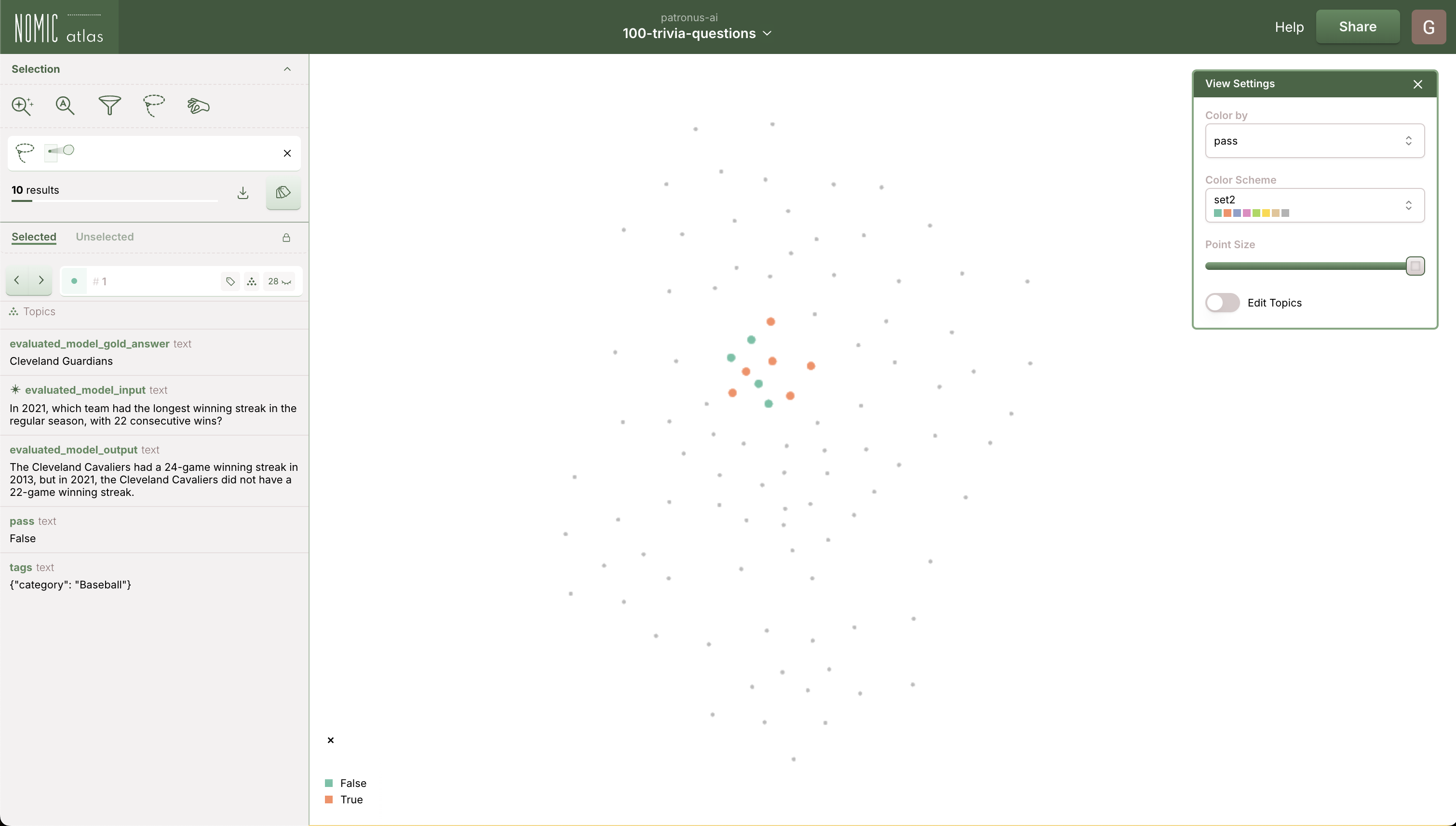

Viewing the Nomic Atlas Map

Nomic takes a little while to process the uploaded dataset and build a map from the embedding column we gave it. You'll get an email once it's ready, and then you can open the map and explore it. We chose to color the points by the pass field because we want to know whether the questions our model failed share similar topics. A failure here means the model output differed in meaning from the golden label we provided. Below is the resulting visualization, with False results colored in green.

The 100-question trivia dataset visualized in Nomic Atlas, with True in orange and False in green

Some failures are sprinkled across the embedding space, but most results are correct, which is good news for the LLM. There is one area in particular, though, where our model does seem to struggle. We can use the Lasso tool to select that area and bring its points into focus. That shows which kinds of questions are causing problems and helps us get to the bottom of this high-failure cluster.

The selected questions all turn out to be about baseball trivia
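Because every question carries a category, you can cross-check a visual cluster like this one numerically. The sketch below assumes each result row also has a `category` key. The script as written only sends the category to Patronus as a tag, so you would add `"category": sample["category"]` to the row dict yourself. The function name is hypothetical.

```python
from collections import Counter


def failure_rate_by_category(rows):
    """Return {category: fraction of failed rows}, highest failure rate first."""
    totals = Counter(row["category"] for row in rows)
    failures = Counter(row["category"] for row in rows if not row["passed"])
    rates = {category: failures[category] / totals[category] for category in totals}
    return dict(sorted(rates.items(), key=lambda item: item[1], reverse=True))
```

If the lasso selection really is a baseball cluster, Baseball should appear at or near the top of this ranking.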

A Few Failed Examples

Let's pull out a few examples of failures to see what's going on.
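If you are working from the script's `rows` rather than the Atlas map, a small helper can print failures in the same prompt/answer/label layout used for the examples here. It uses the `input`, `output`, `gold_answer`, and `passed` keys from the script. The helper name and the `limit` parameter are hypothetical.

```python
def format_failures(rows, limit=3):
    """Render up to `limit` failed rows as prompt/answer/label blocks separated by blank lines."""
    blocks = []
    for row in rows:
        if len(blocks) >= limit:
            break
        if row["passed"]:
            continue
        blocks.append(
            f'prompt: "{row["input"]}"\n'
            f'answer: "{row["output"]}"\n'
            f'label: "{row["gold_answer"]}"'
        )
    return "\n\n".join(blocks)
```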

prompt: "Which player hit the most home runs in the 2020 MLB season?"

answer: "Kyle Schwarber hit 11 home runs in the 2020 MLB season, which was the most in the league that year."

label: "Luke Voit (New York Yankees) with 22 home runs"

For the first question, our model answered Kyle Schwarber, but the correct answer is Luke Voit. The model is hallucinating, and it's good that we caught it. We should think about how to reduce hallucinations, for example by feeding in relevant context or through some other approach.
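One way to "feed in context" with the script's Together call is to put reference text into the system message. The model can then answer from that text instead of from memory. The sketch below builds the `messages` list for `client.chat.completions.create`. The grounding prompt wording and the `context` parameter are our assumptions, and where the context comes from (a retrieval step, a stats table) is outside this sketch.

```python
def build_messages(question, context=None):
    """Chat messages for the model call, optionally grounding the answer in `context`."""
    system = "Answer the following question succinctly:"
    if context:
        system = (
            "Answer the following question succinctly, using only the context below. "
            "If the context does not contain the answer, say you don't know.\n\n"
            f"Context:\n{context}"
        )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]
```

Without context, this returns the same two messages the script already sends, so swapping it in does not change the baseline run.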

prompt: "Which team holds the record for the most career strikeouts by a pitcher in Major League Baseball?"

answer: "The team that holds the record for the most career strikeouts by a pitcher in Major League Baseball is the Chicago Cubs."

label: "New York Yankees (with 27 championships)"

The second question is a bit confusing. It asks which team holds the record for career strikeouts by a pitcher, but that is inherently an individual's record, so it's unclear how meaningful any answer could be. On top of that, the golden answer names the New York Yankees and then adds 27 championships as supporting information. That detail is unrelated to strikeouts and therefore not useful. This looks more like a data quality issue than a model mistake.

This highlights the importance of very high data quality in benchmarking datasets, so that you can actually rely on the computed performance metrics. The good news is that Patronus can help with that, so you don't need to pull random trivia datasets from blog posts on the Internet.

prompt: "In 2021, which team had the longest winning streak in the regular season, with 22 consecutive wins?"

answer: "The Cleveland Cavaliers had a 24-game winning streak in 2013, but in 2021, the Cleveland Cavaliers did not have a 22-game winning streak."

label: "Cleveland Guardians"

The third question is more specific and therefore easier to verify. The first issue is that the golden answer is wrong. The longest MLB winning streak that season belonged to the St. Louis Cardinals, who won 17 games in a row. The second issue is that the question doesn't say which sport it's about, and without that context the model assumed basketball instead of baseball.

Conclusion

In conclusion, the model doesn't seem to be to blame for most of these failures. We copied a problematic benchmarking dataset from an unverified source, and the analysis surfaced inconsistencies and inaccuracies within it.

By combining Patronus evaluators with Nomic Atlas' visualization capabilities, we reached this realization and can now decide on the right next steps. First, we need to fix the dataset. Then we can rerun the failure analysis and see where the model's weaknesses still lie. We did confirm that the model hallucinated at least once, so it's worth keeping an eye out for more of those.
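A cautious first step toward fixing the dataset is to set aside the questions identified as flawed rather than guessing corrected labels. Corrections should come from a verified source. The sketch below lists the two question strings discussed above (copied verbatim from the dataset), drops them from the samples, and writes a clean JSONL file with no blank lines. The helper names are hypothetical, and so is any output file name you pass to `write_jsonl`.

```python
import json

# The strikeout question and the 2021 winning-streak question discussed above.
FLAGGED_QUESTIONS = [
    "Which team holds the record for the most career strikeouts by a pitcher in Major League Baseball?",
    "In 2021, which team had the longest winning streak in the regular season, with 22 consecutive wins?",
]


def drop_flagged(samples, flagged_texts):
    """Return the samples whose question text is not in `flagged_texts`."""
    flagged = set(flagged_texts)
    return [sample for sample in samples if sample["text"] not in flagged]


def write_jsonl(samples, path):
    """Write one JSON object per line, with no blank lines in between."""
    with open(path, "w", encoding="utf-8") as f:
        for sample in samples:
            f.write(json.dumps(sample) + "\n")
```

Running the evaluation script on the filtered file gives a rerun whose failures are more likely to reflect the model than the labels.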
