Comparing Performance Snapshots

Estimated Time: 3 minutes

The Comparisons feature lets you compare LLM performance across different configurations and experiments. A Performance Snapshot is an evaluation summary report for a specific LLM configuration.

1. Create a Performance Snapshot

To create a Performance Snapshot, you first need some logged results. If you don't have any yet, run the experiments in our quick start guide.

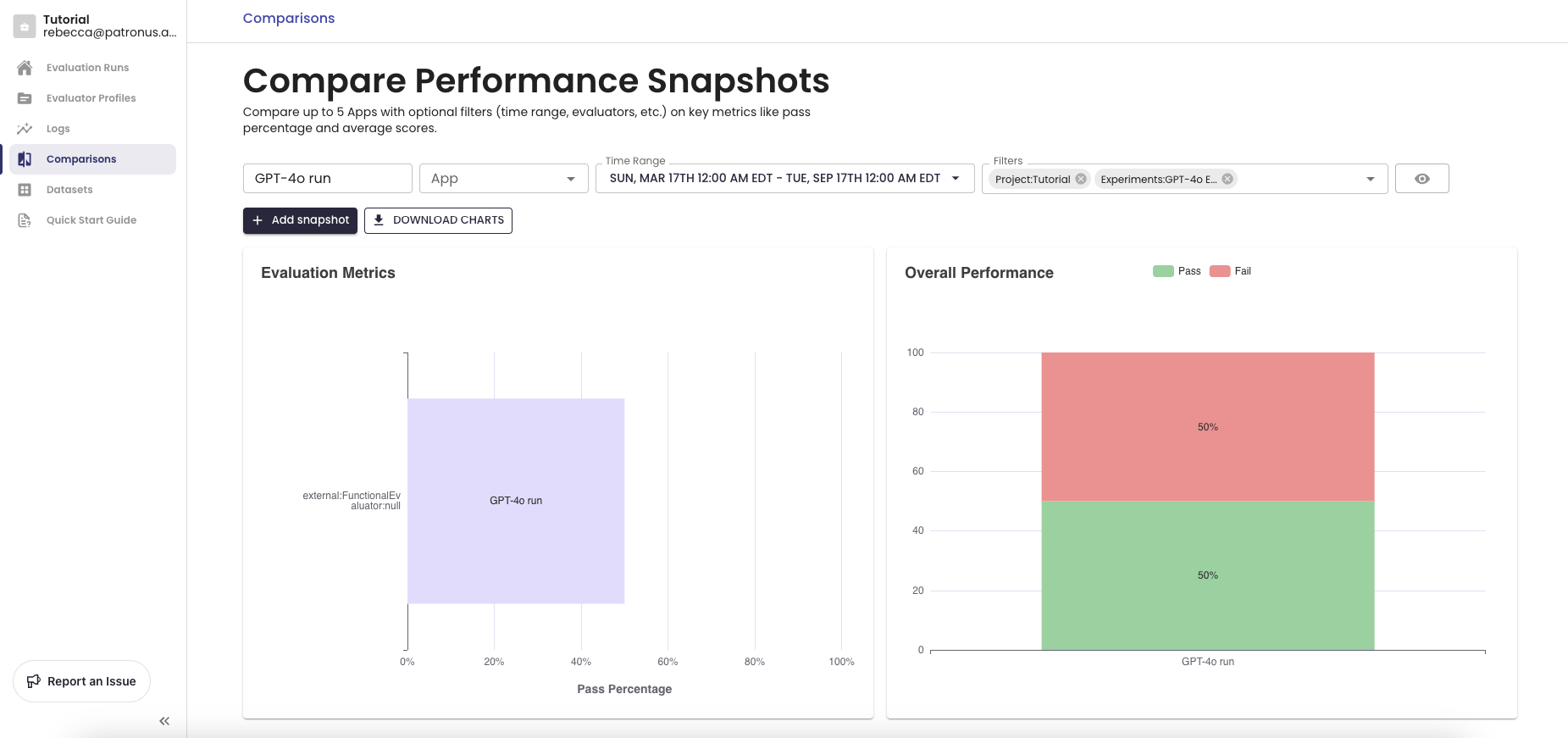

To get started, navigate to the Comparisons tab.

Click "Search" and set filters describing what you want to compare. A project ID is required. Examples of filters include:

- time range
- evaluation criteria
- tags
- scores
- projects
- experiments
- datasets

2. Generate Comparisons

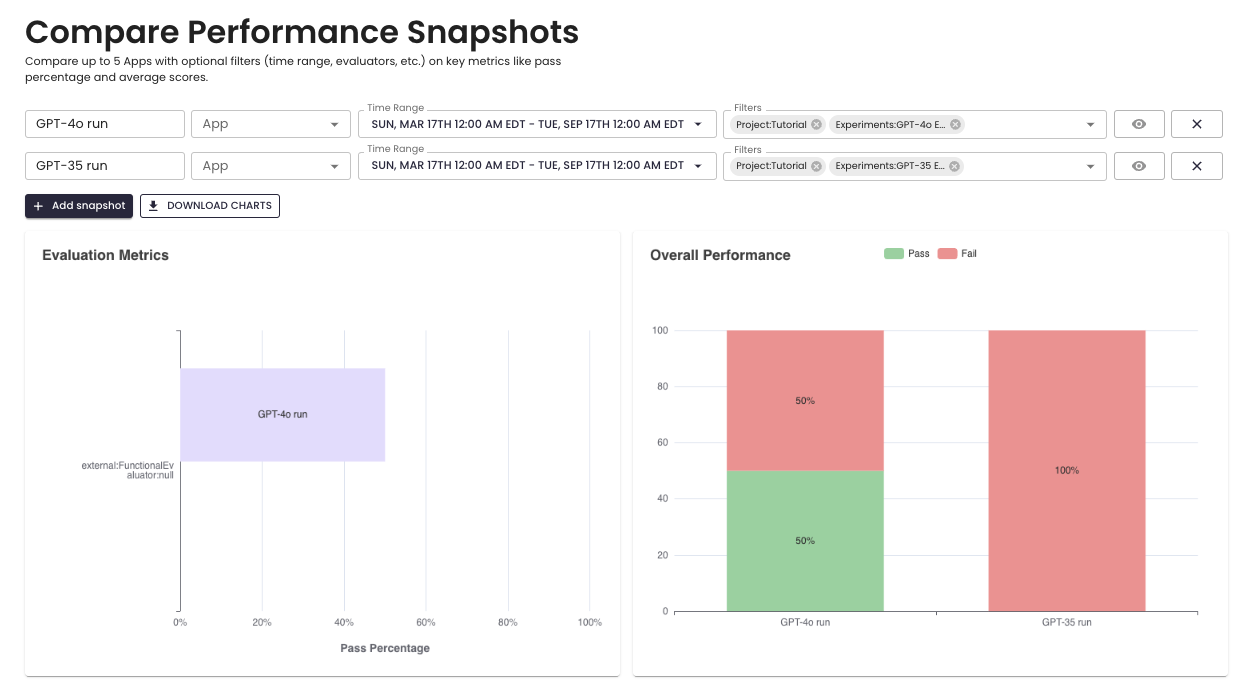

When you add multiple Performance Snapshots, their results are shown side by side. Use this view to choose the best-performing LLM for your GenAI application and to track how LLM performance changes over time.

To compare against another Performance Snapshot, click "Add snapshot". In the example above, an experiment running GPT-4o is compared against one running GPT-3.5.

Each snapshot shows its overall evaluation pass and fail percentages, plus a per-evaluator breakdown showing exactly which evaluations the model performed poorly on. You can give your Performance Snapshots descriptive names and compare up to 5 snapshots at once.
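To make the pass/fail percentages and per-evaluator breakdown concrete, here is a minimal Python sketch of how such a summary could be computed from logged evaluation results. It is an illustration only, not the product's implementation: the `EvalResult` record, the `summarize` and `worst_evaluator` functions, and the evaluator names are hypothetical, and the sketch assumes each logged result is a single pass/fail outcome from one evaluator.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class EvalResult:
    """One logged evaluation outcome (hypothetical record shape)."""
    evaluator: str  # evaluator name, e.g. "relevance" (illustrative)
    passed: bool


def summarize(results):
    """Return overall pass/fail percentages and per-evaluator pass rates."""
    if not results:
        raise ValueError("no results logged for this snapshot")
    total = len(results)
    passed = sum(r.passed for r in results)  # True counts as 1
    per_eval = defaultdict(lambda: [0, 0])   # evaluator -> [passed, total]
    for r in results:
        per_eval[r.evaluator][0] += r.passed
        per_eval[r.evaluator][1] += 1
    return {
        "pass_pct": 100 * passed / total,
        "fail_pct": 100 * (total - passed) / total,
        "by_evaluator": {name: 100 * p / n for name, (p, n) in per_eval.items()},
    }


def worst_evaluator(summary):
    """Name of the evaluator with the lowest pass rate."""
    rates = summary["by_evaluator"]
    return min(rates, key=rates.get)


if __name__ == "__main__":
    # Made-up results, for illustration only.
    results = [
        EvalResult("relevance", True),
        EvalResult("relevance", False),
        EvalResult("toxicity", True),
        EvalResult("toxicity", True),
    ]
    summary = summarize(results)
    print(summary)
    print(worst_evaluator(summary))
```

Keeping the per-evaluator counts separate from the overall totals is what lets a view like this point at the specific evaluators dragging the overall pass rate down.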

3. Export Results

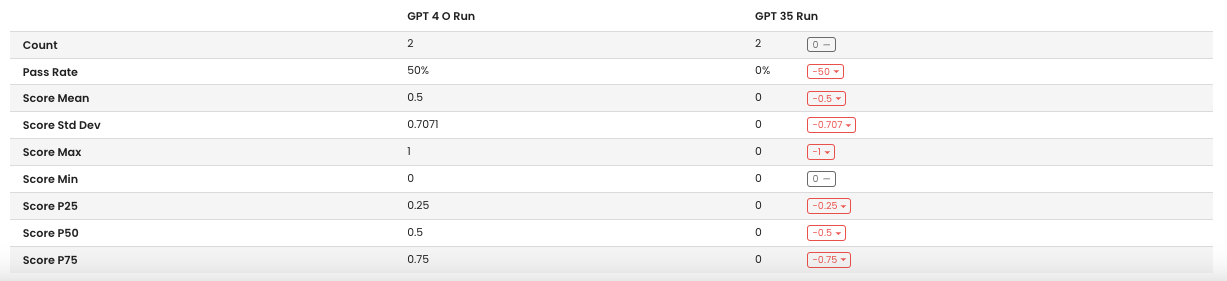

The Comparisons view displays metric charts alongside aggregate statistics and performance deltas between snapshots. You can download these charts and results as a PDF containing an evaluation summary report for your Performance Snapshots, and share it with your team to support informed business decisions.
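One way to think about performance deltas is as the difference in each metric between a baseline snapshot and every other snapshot. The Python sketch below assumes exactly that model; the `compare` function, the metric names, and the convention of treating the first snapshot as the baseline are hypothetical, and the product may compute its deltas differently. It also enforces the 5-snapshot limit described earlier.

```python
# Maximum number of snapshots compared at once (limit stated in this guide).
MAX_SNAPSHOTS = 5


def compare(snapshots):
    """Compute per-metric deltas against a baseline snapshot.

    `snapshots` maps a snapshot name to a dict of metric -> value (percent).
    The first entry is treated as the baseline (a hypothetical convention).
    Returns {snapshot_name: {metric: delta_in_percentage_points}} for every
    non-baseline snapshot, covering only metrics present in both snapshots.
    """
    if not 2 <= len(snapshots) <= MAX_SNAPSHOTS:
        raise ValueError(f"compare between 2 and {MAX_SNAPSHOTS} snapshots")
    names = list(snapshots)  # dicts preserve insertion order
    baseline = snapshots[names[0]]
    result = {}
    for name in names[1:]:
        current = snapshots[name]
        shared = sorted(baseline.keys() & current.keys())
        result[name] = {m: round(current[m] - baseline[m], 2) for m in shared}
    return result


if __name__ == "__main__":
    # Made-up numbers, for illustration only.
    example = {
        "gpt-3.5": {"pass_pct": 72.0, "relevance": 65.0},
        "gpt-4o": {"pass_pct": 81.5, "relevance": 70.0},
    }
    print(compare(example))
```

Using the first snapshot as the baseline mirrors comparing a new experiment (for example, GPT-4o) against an existing one (GPT-3.5): a positive delta means the candidate scored higher on that metric.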
