Using Evaluators

Estimated Time: 7 mins

How to use Evaluators

Evaluators are at the heart of the Patronus Evaluation API. In this guide, you'll learn about the range of Patronus evaluators and how to use them to score AI outputs against a broad set of criteria.

You can pass a list of evaluators in each evaluation API request. Each entry in the list must name its evaluator in the "evaluator" field. For example, run the following to query Lynx:

    curl --location 'https://api.patronus.ai/v1/evaluate' \
      --header 'X-API-KEY: YOUR_API_KEY' \
      --header 'Content-Type: application/json' \
      --data '{
        "evaluators": [
          {
            "evaluator": "retrieval-hallucination-lynx",
            "explain_strategy": "always"
          }
        ],
        "evaluated_model_input": "Who are you?",
        "evaluated_model_output": "My name is Barry.",
        "evaluated_model_retrieved_context": ["I am John."],
        "tags": {"experiment": "quick_start_tutorial"}
      }'

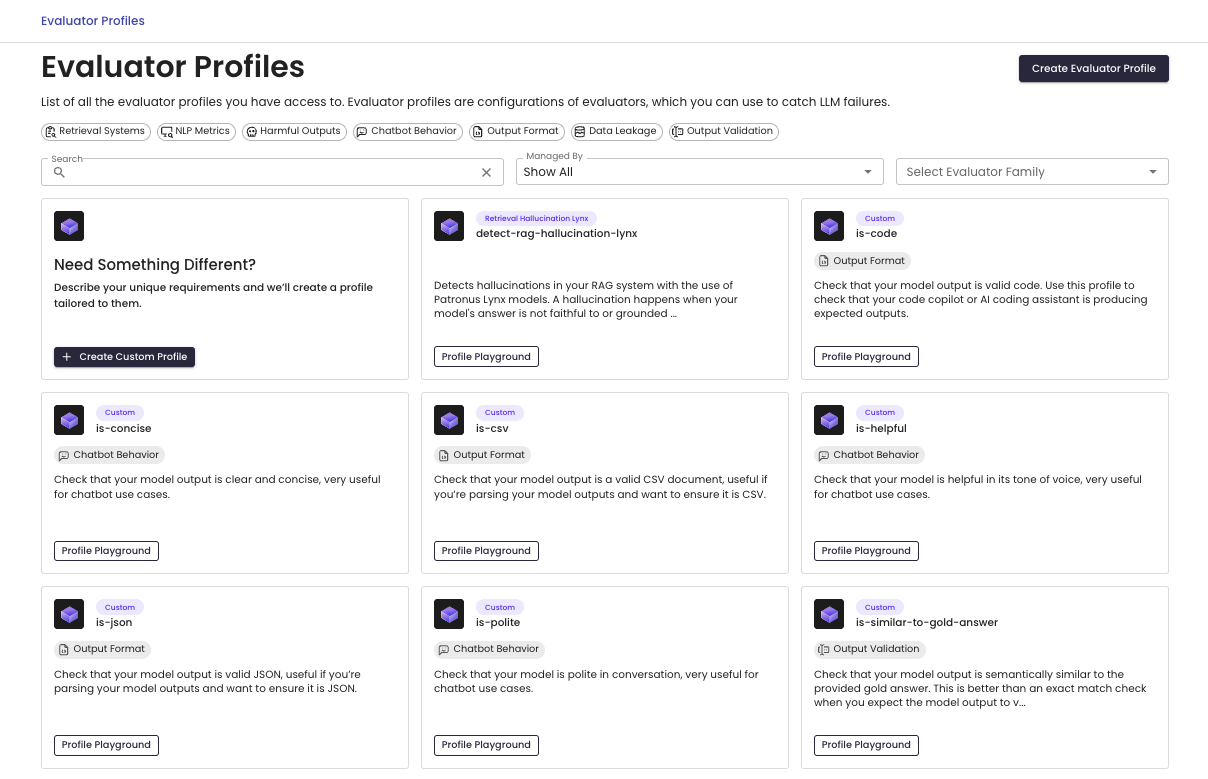
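
Because the "evaluators" field is a list, one request can carry several evaluators at once. The following is a minimal sketch of that idea, not a confirmed recipe: the choice of evaluators, the tag value, the helper names `build_payload` and `send_request`, and the `PATRONUS_API_KEY` environment variable are all assumptions made for this example. It also omits "explain_strategy"; this page does not say whether that field is optional, so add it back if the API rejects the request.

```bash
#!/bin/sh
# Sketch: run several evaluators from this page in a single request.
# Helper names, the tag value, and PATRONUS_API_KEY are illustrative assumptions.

# Print the JSON request body. All three evaluators chosen here need only
# evaluated_model_input and/or evaluated_model_output.
build_payload() {
  cat <<'JSON'
{
  "evaluators": [
    {"evaluator": "pii"},
    {"evaluator": "toxicity"},
    {"evaluator": "retrieval-answer-relevance"}
  ],
  "evaluated_model_input": "Who are you?",
  "evaluated_model_output": "My name is Barry.",
  "tags": {"experiment": "multi_evaluator_example"}
}
JSON
}

# POST the body to the endpoint used in the example above.
# --data @- tells curl to read the request body from stdin.
send_request() {
  build_payload | curl --silent --location 'https://api.patronus.ai/v1/evaluate' \
    --header "X-API-KEY: ${PATRONUS_API_KEY}" \
    --header 'Content-Type: application/json' \
    --data @-
}

# Only contact the API when a key has been exported.
if [ -n "${PATRONUS_API_KEY:-}" ]; then
  send_request
fi
```

Keeping the body in its own function makes it easy to inspect or validate the JSON before anything is sent.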

What Evaluators are supported?

Patronus supports a suite of high-quality evaluators in the evaluation API. To use any of these evaluators, put the evaluator family name in the "evaluator" field of the code snippet above.

| Evaluator Family | Definition | Required Fields | Score Type |
|---|---|---|---|
| phi | Checks for protected health information (PHI), defined broadly as any information about an individual's health status or provision of healthcare. | evaluated_model_output | Binary |
| pii | Checks for personally identifiable information (PII). PII is information that, in conjunction with other data, can identify an individual. | evaluated_model_output | Binary |
| toxicity | Checks output for abusive and hateful messages. | evaluated_model_output | Continuous |
| retrieval-hallucination-lynx | Checks whether the LLM response is hallucinatory, i.e. the output is not grounded in the provided context. | evaluated_model_input, evaluated_model_output, evaluated_model_retrieved_context | Binary |
| retrieval-answer-relevance | Checks whether the answer is on-topic to the input question. Does not measure correctness. | evaluated_model_input, evaluated_model_output | Binary |
| retrieval-context-relevance | Checks whether the retrieved context is on-topic to the input. | evaluated_model_input, evaluated_model_retrieved_context | Binary |
| retrieval-context-sufficiency | Checks whether the retrieved context is sufficient to generate an output similar in meaning to the label. The label should be the correct evaluation result. | evaluated_model_input, evaluated_model_retrieved_context, evaluated_model_output, evaluated_model_gold_answer | Binary |
| metrics | Computes common NLP metrics on the output and label fields to measure semantic overlap and similarity. Currently supports bleu and rouge metrics. | evaluated_model_output, evaluated_model_gold_answer | Continuous |
| custom | Checks against custom criteria definitions, such as "MODEL OUTPUT should be free from brackets." LLM based and uses active learning to improve the criteria definition based on user feedback. | evaluated_model_input, evaluated_model_output, evaluated_model_gold_answer | Binary |
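
Since each evaluator family needs different fields, it can help to check a request body before sending it. The sketch below encodes the Required Fields column of the table as a lookup and reports any field missing from a payload. The function names `required_fields` and `missing_fields` are hypothetical helpers written for this example and are not part of the Patronus API. The check is a plain text match on quoted key names rather than a real JSON parse, so treat it as a quick sanity check only.

```bash
#!/bin/sh
# Sketch: pre-flight check of required fields, based on the table above.
# required_fields and missing_fields are hypothetical helper names.

# Print the space-separated required fields for an evaluator family.
required_fields() {
  case "$1" in
    phi|pii|toxicity)
      echo "evaluated_model_output" ;;
    retrieval-hallucination-lynx)
      echo "evaluated_model_input evaluated_model_output evaluated_model_retrieved_context" ;;
    retrieval-answer-relevance)
      echo "evaluated_model_input evaluated_model_output" ;;
    retrieval-context-relevance)
      echo "evaluated_model_input evaluated_model_retrieved_context" ;;
    retrieval-context-sufficiency)
      echo "evaluated_model_input evaluated_model_retrieved_context evaluated_model_output evaluated_model_gold_answer" ;;
    metrics)
      echo "evaluated_model_output evaluated_model_gold_answer" ;;
    custom)
      echo "evaluated_model_input evaluated_model_output evaluated_model_gold_answer" ;;
    *)
      echo "unknown evaluator family: $1" >&2
      return 1 ;;
  esac
}

# Usage: missing_fields FAMILY PAYLOAD_JSON
# Prints each required field whose quoted key does not appear in the payload text.
missing_fields() {
  fields="$(required_fields "$1")" || return 1
  for field in $fields; do
    case "$2" in
      *"\"$field\""*) ;;          # key is present, nothing to report
      *) echo "$field" ;;         # key is absent
    esac
  done
}
```

For example, `missing_fields retrieval-answer-relevance '{"evaluated_model_output": "hi"}'` prints `evaluated_model_input`, the one field the payload lacks for that family.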

If you'd like to create your own evaluator, read the following sections on custom evaluators.
