Human Annotations

Human annotations are an important part of any AI evaluation system. Human-reviewed scores on AI system outputs can come from engineers, domain experts (in-house or external), or even the product's end users. Our human annotations let you do the following:

- Review AI system outputs in experiments or live monitoring

- Validate how closely automated metrics, such as LLM judges or Patronus evaluators, agree with human reviewers

- Store comments on logs

- Create more challenging test datasets
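As an illustration of the agreement check in the list above, here is a minimal Python sketch that compares human labels against an automated judge's labels for the same logs. It does not use the Patronus SDK: the label lists, the function names, and the idea of exporting labels as plain Python lists are all assumptions made for the example. It computes the raw agreement rate and Cohen's kappa (agreement corrected for chance), and collects the indices where the two disagree, which are natural candidates for a more challenging test dataset.

```python
from collections import Counter


def agreement_rate(human, automated):
    """Fraction of items on which the two label lists match."""
    if len(human) != len(automated) or not human:
        raise ValueError("label lists must be non-empty and the same length")
    return sum(h == a for h, a in zip(human, automated)) / len(human)


def cohens_kappa(human, automated):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(human)
    observed = agreement_rate(human, automated)
    human_counts = Counter(human)
    auto_counts = Counter(automated)
    # Chance agreement: probability both raters pick the same label independently.
    expected = sum(human_counts[label] * auto_counts[label] for label in human_counts) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


def disagreement_indices(human, automated):
    """Positions where the human and automated labels differ."""
    return [i for i, (h, a) in enumerate(zip(human, automated)) if h != a]


# Hypothetical labels for six logs (illustrative data, not real results).
human_labels = ["pass", "fail", "pass", "pass", "fail", "pass"]
judge_labels = ["pass", "fail", "fail", "pass", "fail", "pass"]

rate = agreement_rate(human_labels, judge_labels)
kappa = cohens_kappa(human_labels, judge_labels)
hard_cases = disagreement_indices(human_labels, judge_labels)
print(f"agreement={rate:.2f} kappa={kappa:.2f} disagreements={hard_cases}")
```

A kappa near 1 suggests the automated metric tracks human judgment closely, while a value near 0 suggests agreement is no better than chance.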

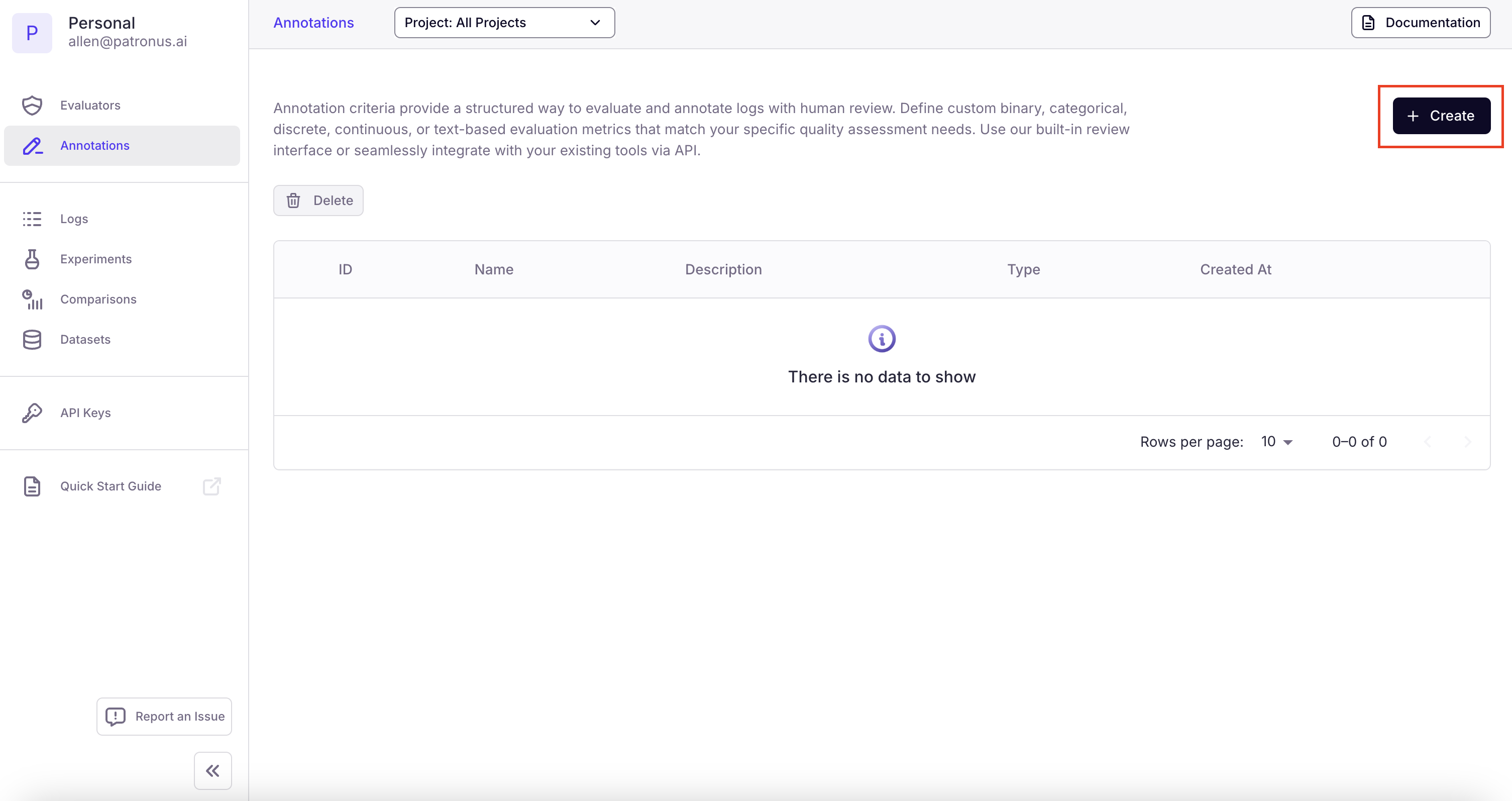

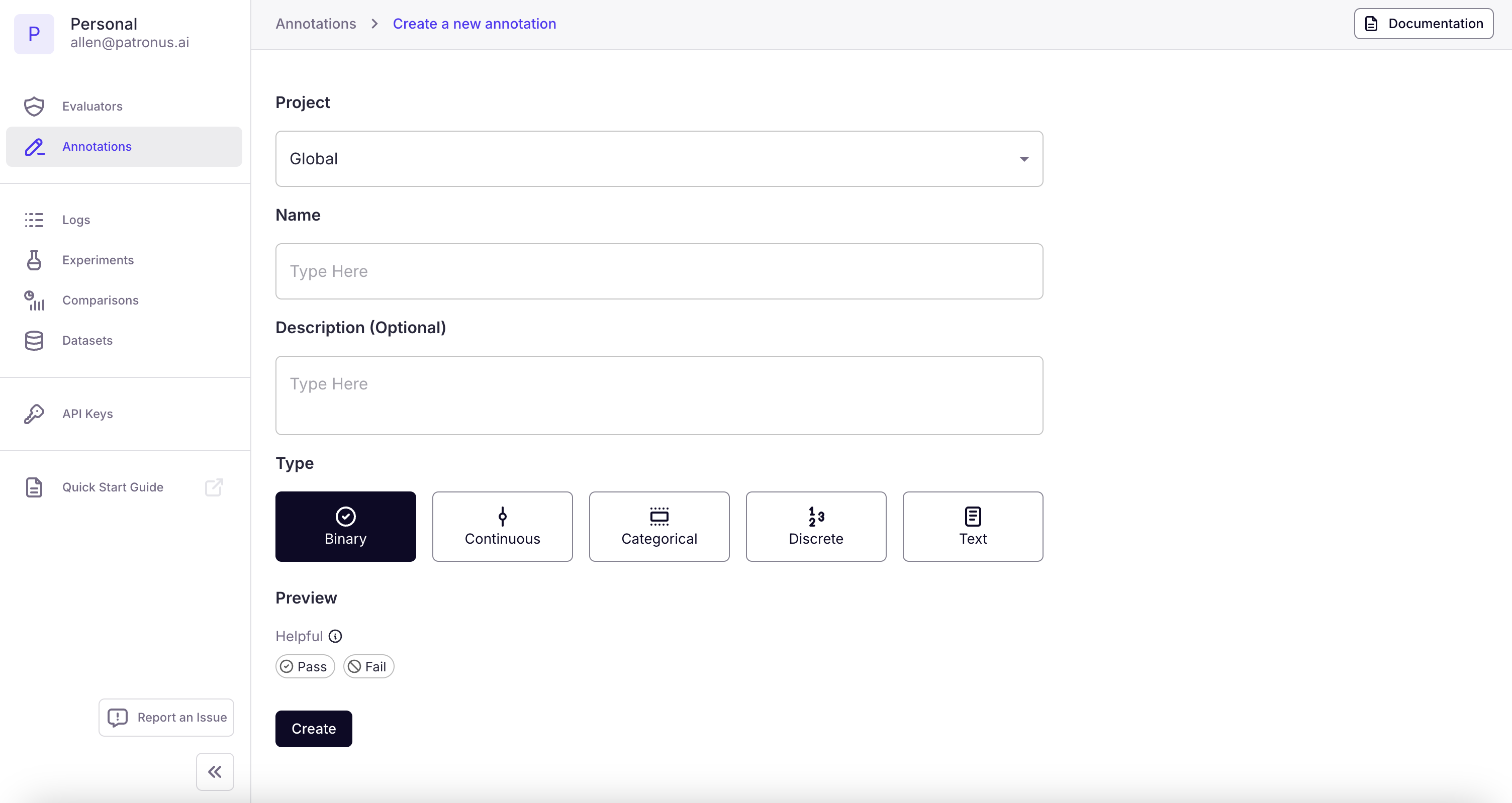

Create Annotation Criteria through the Annotations Tab

Navigate to the Annotations tab.

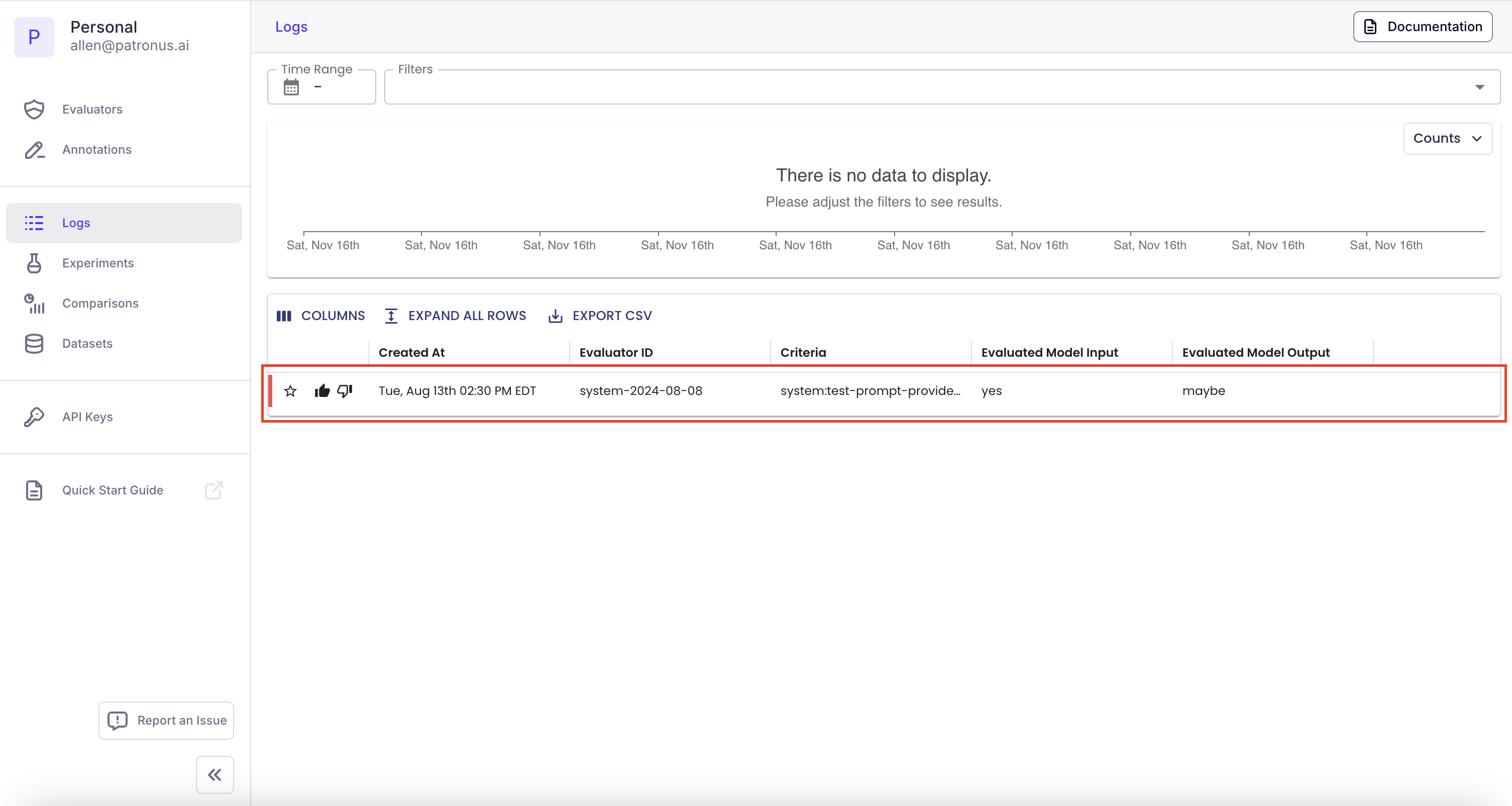

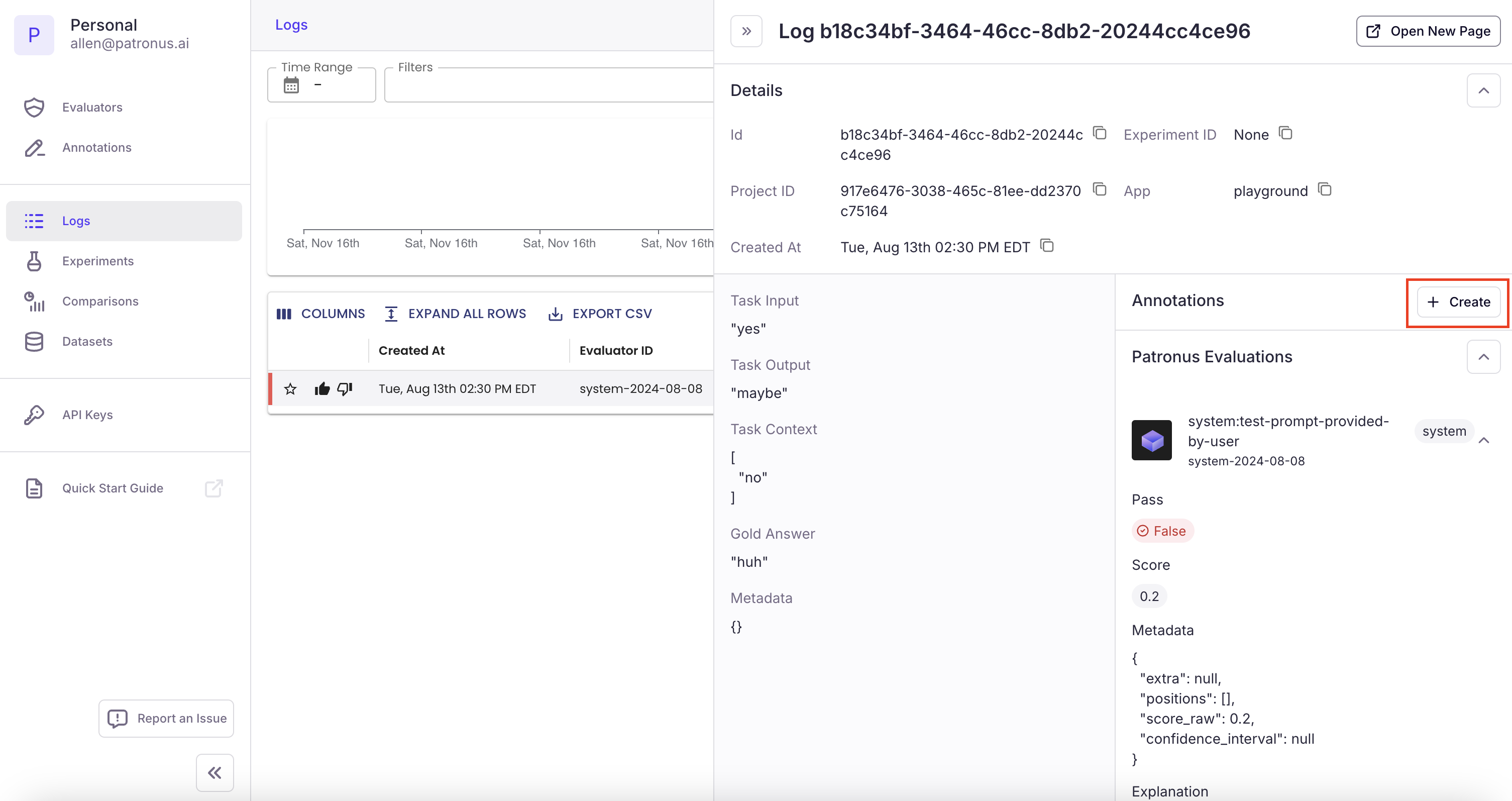

Create Annotation Criteria from the Logs tab

Note 📝: When you create a human annotation from a log, that log's project is passed along and pre-populates the project field.

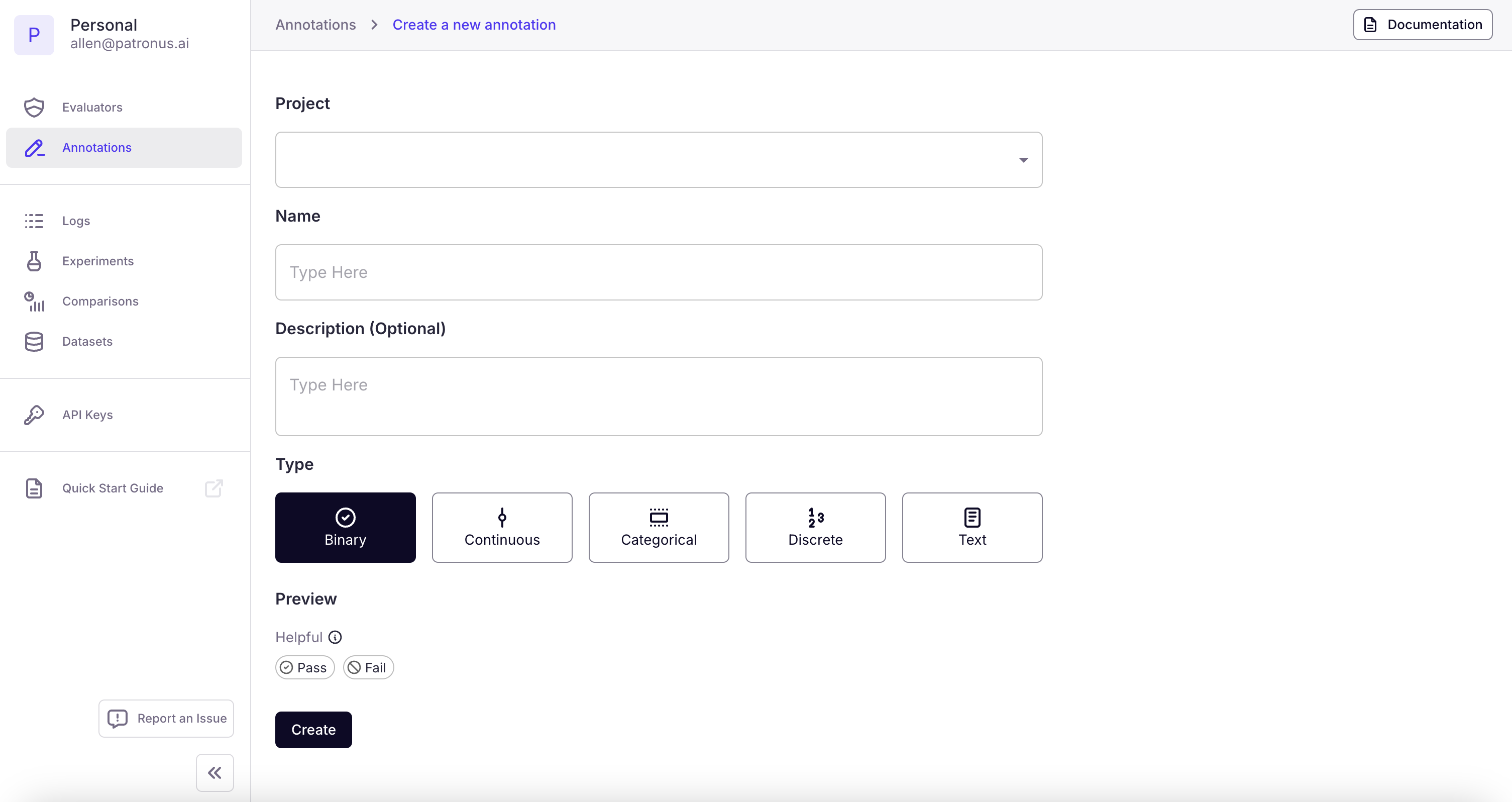

Human Annotation Criteria Fields

| Human Annotation Criteria Field | Required |

|---|---|

| Project | ✅ |

| Name | ✅ |

| Description | ❌ |

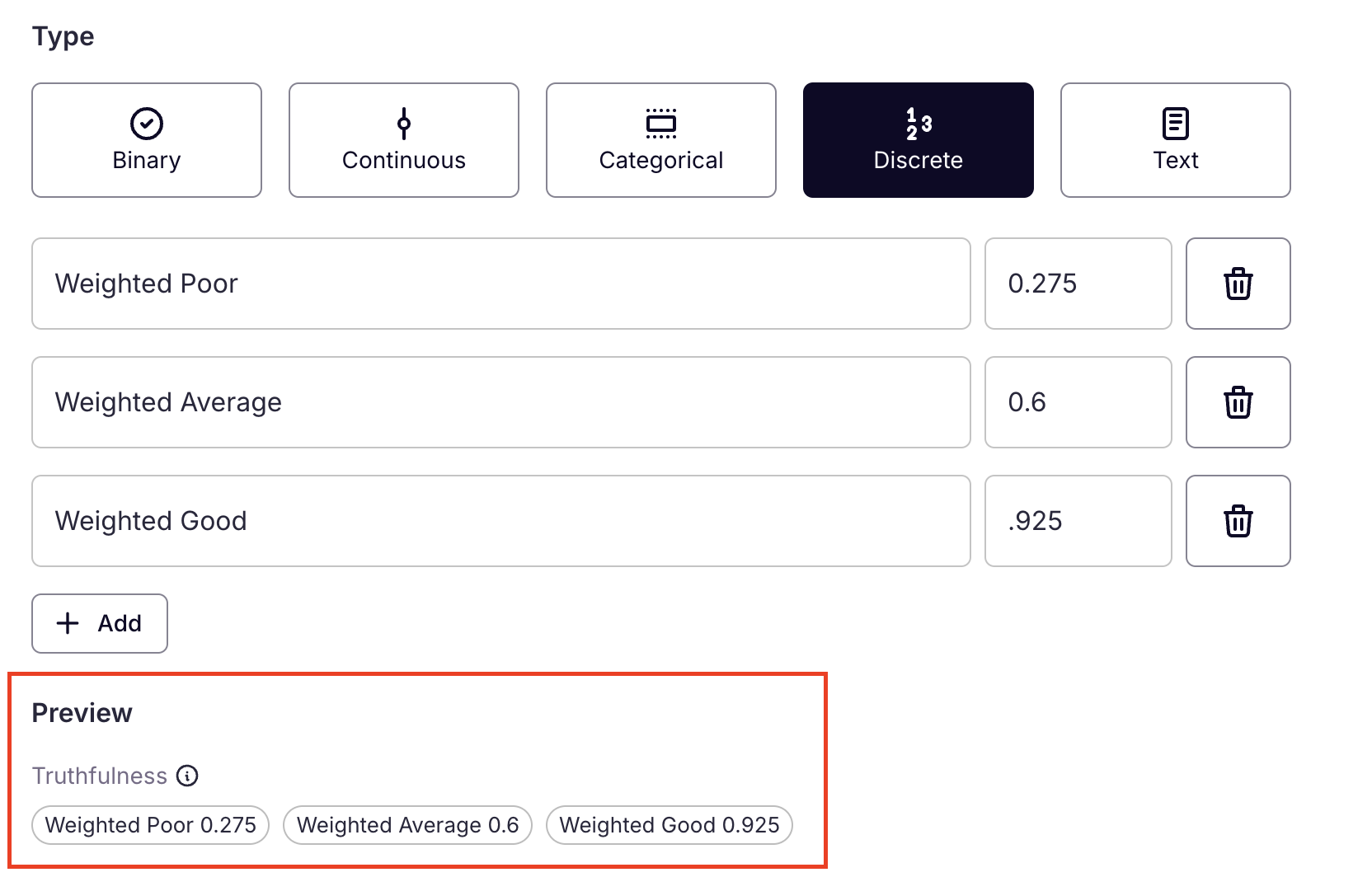

| 2+ Categories | ✅ (only for "Categorical" and "Discrete") |

Tip 👀: Use the "Preview" section at the bottom to see how the human annotation criteria will look on the Logs page.

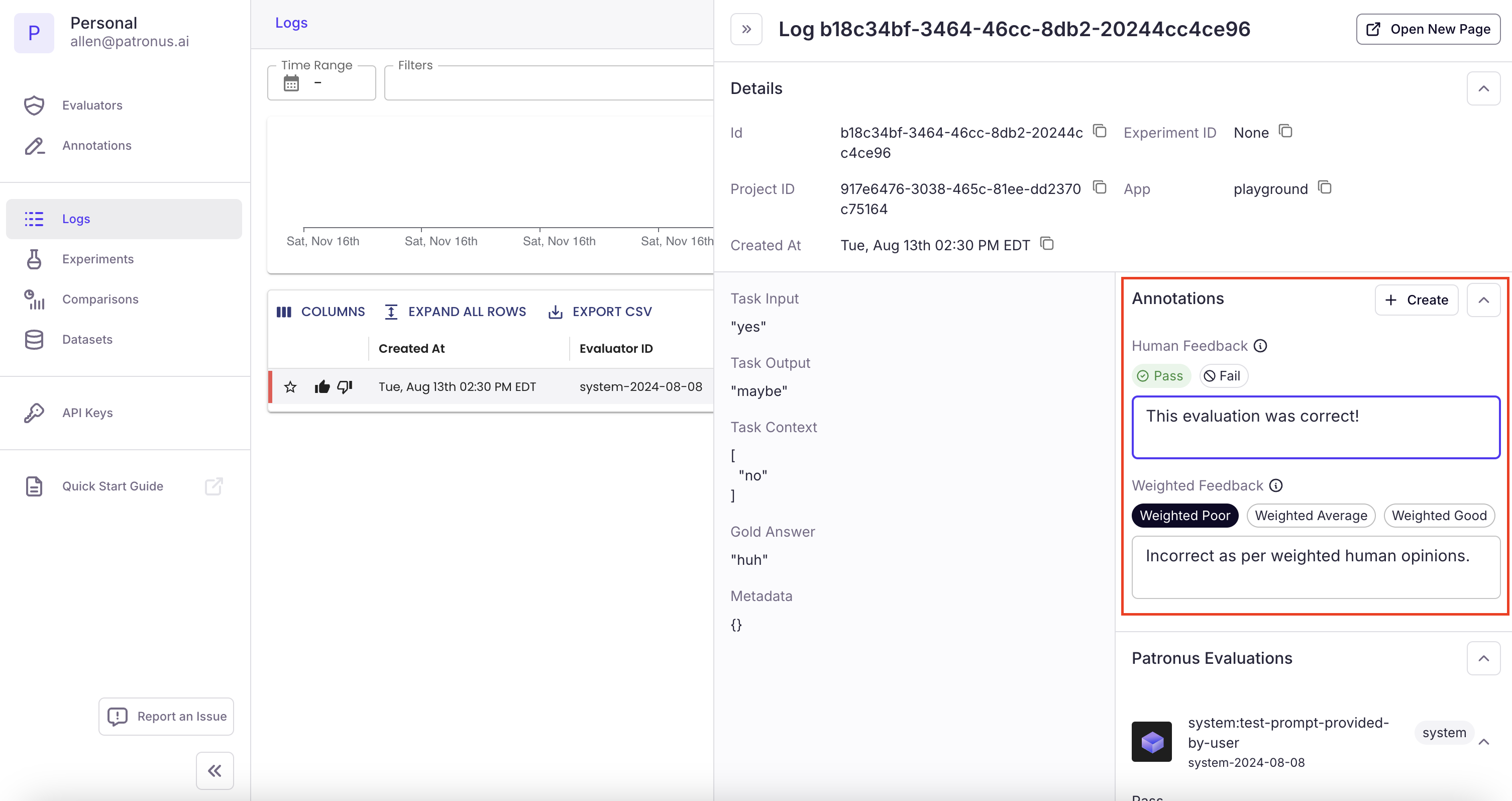
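The field requirements in the table above can be summarized as a small validation routine. This is only a sketch of the rules as stated on this page, not the Patronus API: the `AnnotationCriteria` class, its attribute names (including `annotation_type`), and the `validate` function are hypothetical, and any type value other than "Categorical" and "Discrete" is a placeholder.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Types that require at least two categories, per the table above.
TYPES_REQUIRING_CATEGORIES = {"Categorical", "Discrete"}


@dataclass
class AnnotationCriteria:
    """Hypothetical container mirroring the form fields."""
    project: str                       # required
    name: str                          # required
    annotation_type: str               # assumed field name for the criteria type
    description: Optional[str] = None  # optional
    categories: List[str] = field(default_factory=list)


def validate(criteria: AnnotationCriteria) -> List[str]:
    """Return a list of human-readable problems; an empty list means valid."""
    errors = []
    if not criteria.project.strip():
        errors.append("Project is required")
    if not criteria.name.strip():
        errors.append("Name is required")
    needs_categories = criteria.annotation_type in TYPES_REQUIRING_CATEGORIES
    if needs_categories and len(criteria.categories) < 2:
        errors.append(f"{criteria.annotation_type} criteria need at least 2 categories")
    return errors


# Valid: a categorical criterion with two categories and no description.
ok = AnnotationCriteria("demo-project", "Helpfulness", "Categorical",
                        categories=["Helpful", "Unhelpful"])

# Invalid: missing name and only one category.
bad = AnnotationCriteria("demo-project", "", "Discrete", categories=["1"])

print(validate(ok))
print(validate(bad))
```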

Start Adding Human Annotations

To start adding human annotations, navigate to the "Logs" tab and select a log. On the right side, you should see a section titled "Annotations" where you can provide human annotations.
