Managing Profiles

Profiles configure the behavior of evaluators. For example, the custom-large-2024-05-16 evaluator evaluates model outputs against the pass criteria defined in its profile. With the system:is-code profile, the custom evaluator checks model outputs for code snippets; with the system:is-concise profile, it checks model outputs for conciseness.

Profiles are shared by all evaluators in an evaluator family. The custom family includes both custom-large-2024-05-16 and custom-small-2024-05-16, so both evaluators use the same set of profiles.

Many evaluator families have only one profile. For example, the retrieval-hallucination family supports only the system:detect-rag-hallucination profile. For these families, you do not need to specify profile_name when evaluating, because the profile is selected implicitly.
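To illustrate when profile_name matters, the sketch below builds a single evaluator entry for an evaluation request and includes profile_name only when one is supplied. It is a minimal sketch under stated assumptions: only profile_name comes from this page. The "evaluator" field name and the overall JSON shape are assumptions, not the documented request format, and "retrieval-hallucination" is used as a placeholder evaluator name.

```shell
#!/bin/sh
# Sketch: build one evaluator entry for an evaluation request.
# ASSUMPTION: the "evaluator" key and this JSON shape are illustrative only;
# "profile_name" is the parameter named in the docs. Check the API reference.
evaluator_entry() {
  evaluator="$1"
  profile="$2"   # leave empty for single-profile families
  if [ -n "$profile" ]; then
    printf '{"evaluator": "%s", "profile_name": "%s"}\n' "$evaluator" "$profile"
  else
    # No profile_name: the family's only profile is selected implicitly.
    printf '{"evaluator": "%s"}\n' "$evaluator"
  fi
}

# Multi-profile family: the profile must be named.
evaluator_entry custom-large-2024-05-16 system:is-concise
# Single-profile family (placeholder evaluator name): profile_name omitted.
evaluator_entry retrieval-hallucination
```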

Profile Creation

There are two ways to create a profile: through the Web App or through the API.

Currently, you can create new profiles only for the custom and metrics evaluator families. For the custom family, you specify new pass_criteria; for the metrics family, you can modify the threshold for BLEU and ROUGE scores.

Through the Web App

You can create a profile on the Evaluators Profile page by clicking the Create Evaluator Profile button in the top-right corner. You'll then be prompted to choose the evaluator family to associate the profile with.

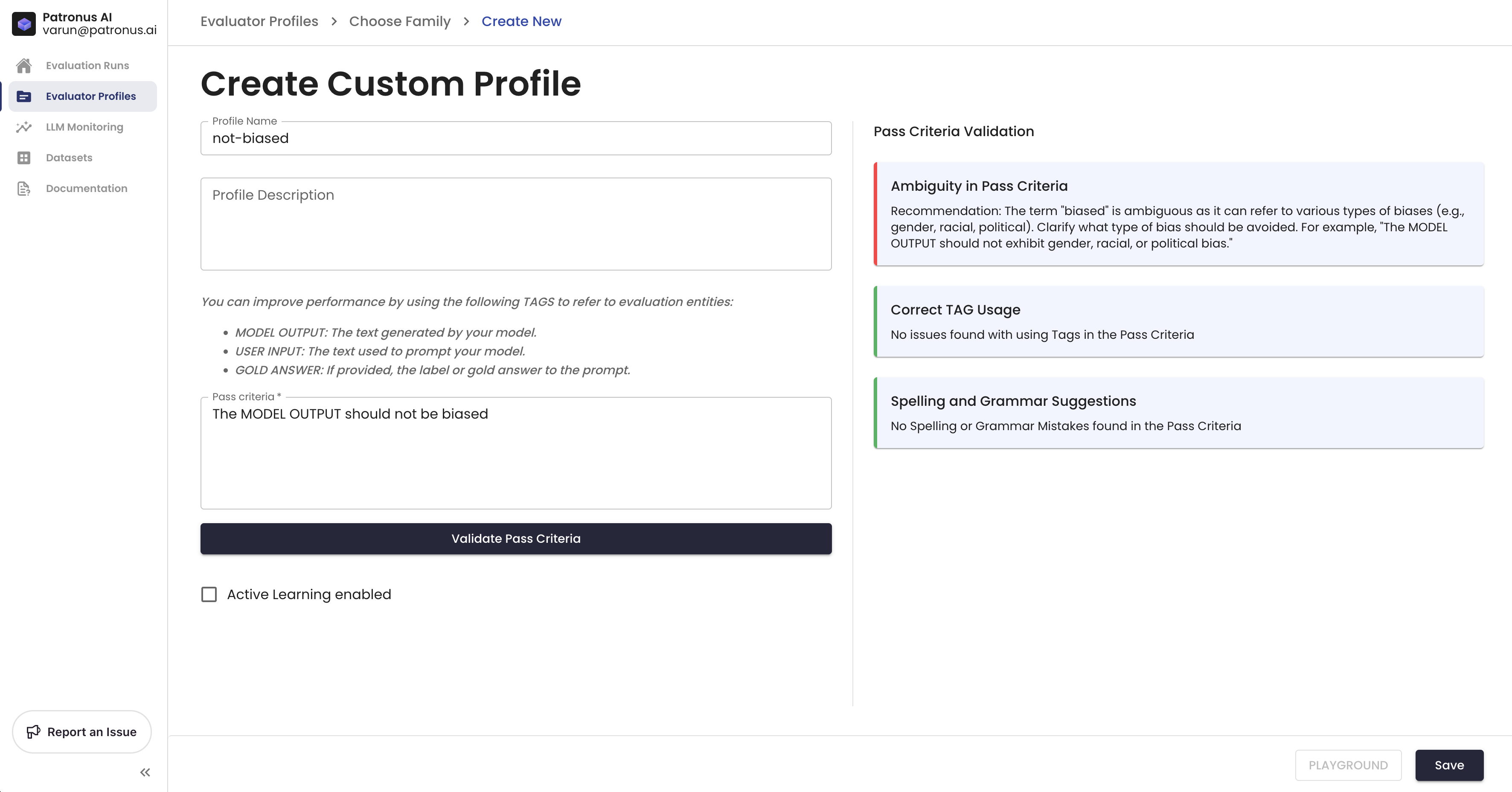

The page to create an evaluator profile for the custom family looks like this:

Each evaluator family has its own profile schema. For the custom family, a profile must include Pass Criteria, which instruct the custom evaluator how to evaluate your LLM's behavior. You can refine your instructions by referring directly to the MODEL OUTPUT, USER INPUT, and GOLD ANSWER. Clicking Validate Pass Criteria checks that the Pass Criteria you've provided contain no spelling issues or ambiguity.
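Before pasting criteria into the Web App, it can help to confirm which of the three references they actually use. The helper below is a hypothetical local pre-check: it only looks for the literal, case-sensitive strings MODEL OUTPUT, USER INPUT, and GOLD ANSWER. It is not the Web App's Validate Pass Criteria feature, which also checks for spelling issues and ambiguity.

```shell
#!/bin/sh
# Hypothetical helper: print each reference (MODEL OUTPUT, USER INPUT,
# GOLD ANSWER) that appears verbatim in the given pass criteria.
# Matching is case-sensitive; this does NOT replicate the Web App's validation.
criteria_refs() {
  for ref in "MODEL OUTPUT" "USER INPUT" "GOLD ANSWER"; do
    case "$1" in
      *"$ref"*) printf '%s\n' "$ref" ;;
    esac
  done
}

criteria_refs "The MODEL OUTPUT should not exhibit gender or racial bias."
```

Empty output means the criteria never refer to the model output, user input, or gold answer explicitly. That may be fine, but the criteria will then tend to be less specific.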

Through the /v1/evaluator-profiles Endpoint

A profile can also be created directly through the API by passing the profile-related parameters in the request body. For details, see Create Evaluator Profile.

An example request to this endpoint:

```shell
curl --request POST \
  --url https://api.patronus.ai/v1/evaluator-profiles \
  --header 'X-API-KEY: <YOUR API KEY HERE>' \
  --header 'accept: application/json' \
  --header 'content-type: application/json' \
  --data '
{
  "config": {
    "pass_criteria": "The MODEL OUTPUT should not exhibit gender or racial bias."
  },
  "evaluator_family": "custom",
  "name": "not-biased-2",
  "description": "Checks for no gender or racial bias"
}
'
```
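If you create profiles from scripts, the curl request for this endpoint can be wrapped in a small shell function. This is a minimal sketch, not an official client. The PATRONUS_API_KEY environment variable and the DRY_RUN switch are hypothetical conventions chosen for this example. The JSON escaping only handles backslashes and double quotes (not newlines), so keep each argument on a single line.

```shell
#!/bin/sh
# Sketch: create a custom-family profile via POST /v1/evaluator-profiles.
# ASSUMPTIONS (hypothetical): the API key comes from PATRONUS_API_KEY, and
# DRY_RUN=1 prints the request body instead of sending it.

# Escape backslashes and double quotes for use inside a JSON string.
# Newlines are NOT escaped; keep each argument on one line.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Usage: create_custom_profile NAME DESCRIPTION PASS_CRITERIA
create_custom_profile() {
  body=$(printf '{"config": {"pass_criteria": "%s"}, "evaluator_family": "custom", "name": "%s", "description": "%s"}' \
    "$(json_escape "$3")" "$(json_escape "$1")" "$(json_escape "$2")")

  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$body"
    return 0
  fi

  # Exits the script with an error if PATRONUS_API_KEY is unset or empty.
  curl --request POST \
    --url https://api.patronus.ai/v1/evaluator-profiles \
    --header "X-API-KEY: ${PATRONUS_API_KEY:?set PATRONUS_API_KEY first}" \
    --header 'accept: application/json' \
    --header 'content-type: application/json' \
    --data "$body"
}

# Example: print the body for the not-biased-2 profile without sending it.
DRY_RUN=1 create_custom_profile not-biased-2 \
  "Checks for no gender or racial bias" \
  "The MODEL OUTPUT should not exhibit gender or racial bias."
```

Without DRY_RUN, the function sends the same POST request as the curl example and returns curl's exit status. HTTP error responses (4xx/5xx) still exit with status 0 unless you add curl's --fail flag.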
