Using Evaluators in Logging

The following tutorial describes how to use your own evaluators in logging with client.evaluate(...). To use Patronus evaluators in logging, see the Patronus Evaluators and evaluation API section.

Register an Evaluator

To use any evaluator in Logging, wrap the evaluator definition with the register_local_evaluator decorator. The example below registers an evaluator named "reverse" and then invokes it with client.evaluate(...). The evaluator uses a random number generator to produce a pass/fail result, and it reverses the model output.

```python
import random
from typing import Optional

import patronus

client = patronus.Client(api_key="YOUR_API_KEY")


# Register the function below as a local evaluator named "reverse".
@client.register_local_evaluator("reverse")
def my_local_evaluator(
    evaluated_model_system_prompt: Optional[str],
    evaluated_model_input: Optional[str],
    evaluated_model_output: Optional[str],
    **kwargs,
) -> patronus.EvaluationResult:
    # Draw a random score in [0, 1) and map it to a pass/fail result.
    v = random.random()
    pass_ = v < 0.66
    # Compare the raw draw (not the boolean) so that roughly a third of
    # results are undecided (None), a third pass, and a third fail.
    if v < 0.33:
        pass_ = None

    return patronus.EvaluationResult(
        pass_=pass_,
        score_raw=v,
        # Reverse the model output, guarding against a missing output.
        text_output=evaluated_model_output and evaluated_model_output[::-1],
        metadata={
            "system_prompt": evaluated_model_system_prompt and evaluated_model_system_prompt[::-1],
            "input": evaluated_model_input and evaluated_model_input[::-1],
            "output": evaluated_model_output and evaluated_model_output[::-1],
        },
        explanation="An explanation!",
        evaluation_duration_s=random.random(),
        explanation_duration_s=random.random(),
        tags={"env": "local"},
    )


# Invoke the locally registered evaluator by the name it was registered under.
resp = client.evaluate(
    "reverse",
    evaluated_model_system_prompt="You are a helpful assistant.",
    evaluated_model_input="Say Foo",
    evaluated_model_output="Foo!",
)

print(resp.model_dump(by_alias=True))
```

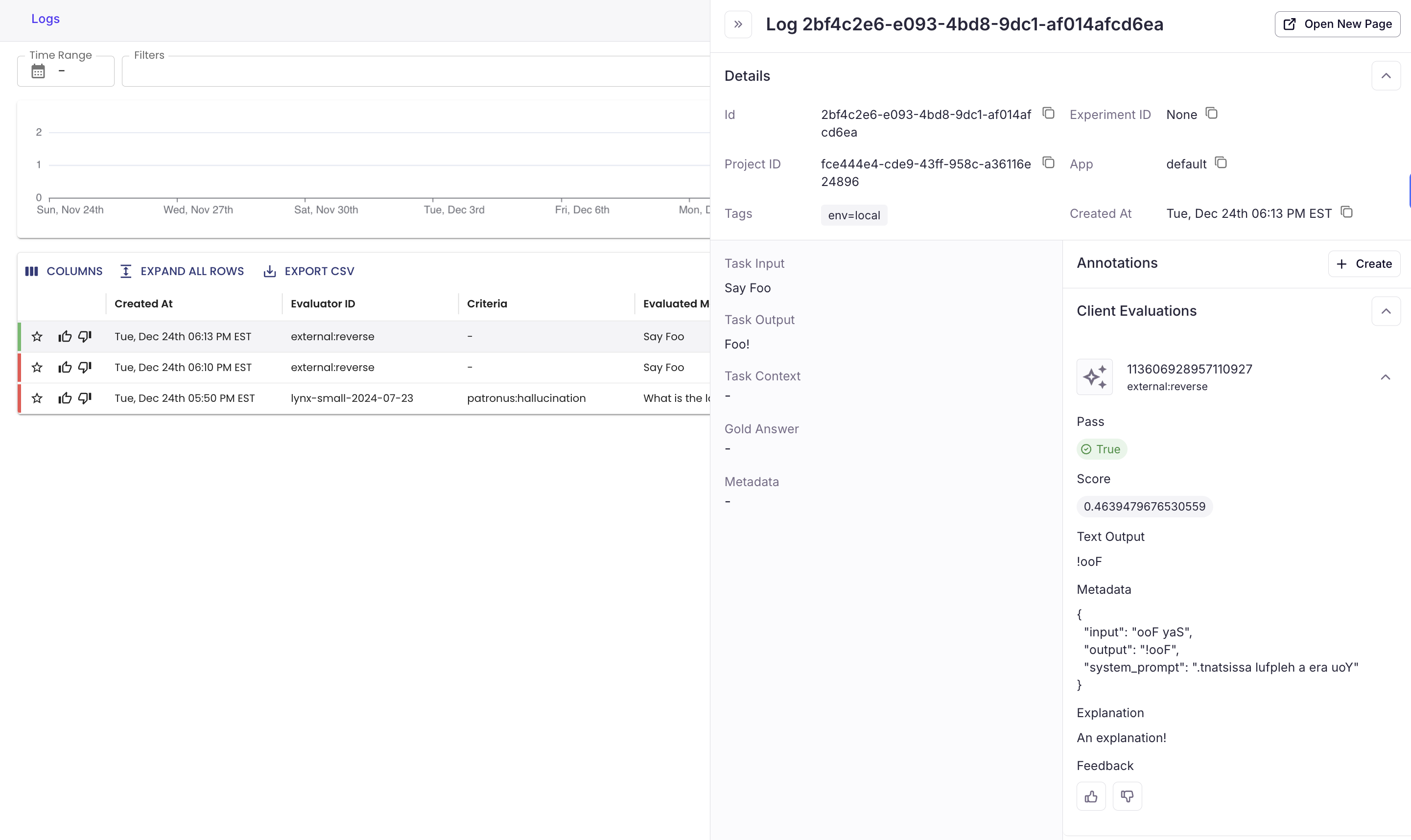

Running this script, we see the eval result in the Logs dashboard.

Here we store the reversed system prompt, input, and output in metadata. Metadata can also contain fine-grained scores produced by the evaluation. The external: prefix indicates that the evaluator was registered locally.
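To show what fine-grained scores in metadata might look like, here is a hypothetical sketch that computes two sub-scores and records them under metadata. It also derives an overall score_raw and pass_ from them. The criteria, the fine_grained_fields helper, and the 0.5 threshold are illustrative assumptions, not part of the Patronus API. The returned keys reuse the keyword names from the example above.

```python
import re
from typing import Any, Dict, Optional


def _words(text: Optional[str]) -> set:
    """Lower-cased word tokens; None is treated as empty text."""
    return set(re.findall(r"\w+", (text or "").lower()))


def fine_grained_fields(
    evaluated_model_input: Optional[str],
    evaluated_model_output: Optional[str],
    threshold: float = 0.5,
) -> Dict[str, Any]:
    # Criterion 1 (hypothetical): the output is not blank.
    non_empty = 1.0 if (evaluated_model_output or "").strip() else 0.0
    # Criterion 2 (hypothetical): share of input words repeated in the output.
    in_words = _words(evaluated_model_input)
    out_words = _words(evaluated_model_output)
    overlap = len(in_words & out_words) / len(in_words) if in_words else 0.0

    scores = {"non_empty": non_empty, "word_overlap": overlap}
    overall = sum(scores.values()) / len(scores)  # unweighted mean
    return {
        "pass_": overall >= threshold,
        "score_raw": overall,
        "metadata": {"scores": scores},  # sub-scores kept for inspection
    }
```

With the inputs from the example, fine_grained_fields("Say Foo", "Foo!") gives word_overlap 0.5 and score_raw 0.75. Inside a registered evaluator, these fields could then be combined with the other arguments, for example patronus.EvaluationResult(**fields, text_output=...). This assumes that the metadata field accepts nested numeric values.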

Metadata contains evaluation result information, whereas tags are used for tracking application configurations. See Logs and Monitoring to learn more about tags, filtering and visualizing evals.
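One way to keep the two roles separate is to derive tags from the application configuration, so that every evaluation from the same setup shares the same tags and per-result details stay in metadata. This is a minimal sketch that assumes string-valued tags, as in {"env": "local"} above. The config_to_tags helper, the selected keys, and the sample configuration are hypothetical.

```python
from typing import Any, Dict, Iterable, Mapping

# Hypothetical configuration fields worth filtering on in the dashboard.
DEFAULT_TAG_KEYS = ("env", "model", "prompt_version")


def config_to_tags(config: Mapping[str, Any],
                   keys: Iterable[str] = DEFAULT_TAG_KEYS) -> Dict[str, str]:
    """Pick configuration fields to track as tags, converting values to strings.

    Keys missing from `config` are skipped rather than raising an error.
    """
    return {k: str(config[k]) for k in keys if k in config}


app_config = {"env": "local", "model": "my-model", "prompt_version": 3, "temperature": 0.2}
tags = config_to_tags(app_config)
```

Here tags becomes {"env": "local", "model": "my-model", "prompt_version": "3"}. It could be passed as tags=tags when building the evaluation result. Fields such as temperature are left out unless they are explicitly selected.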
