Evaluator Reference Guide

Overview

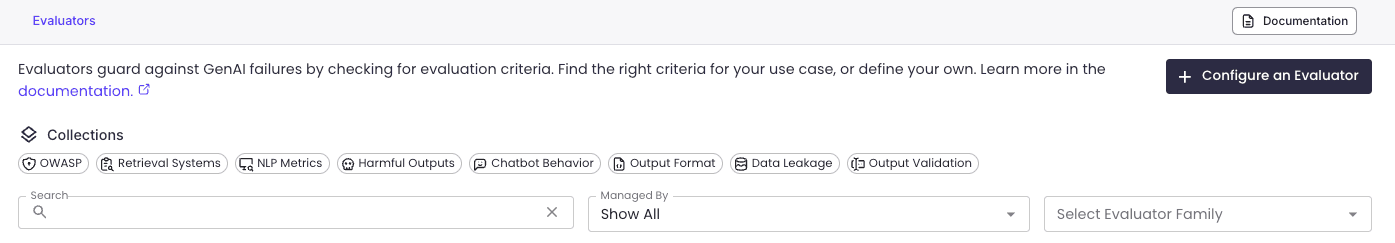

Patronus supports a suite of high-quality evaluators available in the evaluation API and Python SDK.

All evaluators produce a binary pass-or-fail outcome. When raw scores are available, they are normalized onto a 0–1 scale, where 0 indicates a fail result and 1 indicates a pass result.
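As a rough illustration of how a normalized score relates to the binary outcome, the sketch below maps a score in [0, 1] to a pass or fail label. The function name `to_outcome` and the 0.5 cut-off are assumptions made for this example only; they are not taken from the Patronus API, and the platform may apply a different rule.

```python
def to_outcome(normalized_score: float, pass_threshold: float = 0.5) -> str:
    """Map a normalized score in [0, 1] to a pass/fail label.

    The default threshold of 0.5 is an illustrative assumption.
    """
    if not 0.0 <= normalized_score <= 1.0:
        raise ValueError("normalized score must lie in [0, 1]")
    return "pass" if normalized_score >= pass_threshold else "fail"


# The two endpoints match the scale described above: 0 is a fail, 1 is a pass.
print(to_outcome(0.0))
print(to_outcome(1.0))
```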

Evaluator families group together evaluators that perform the same function. Evaluators in the same family share evaluator profiles and accept the same set of inputs, but their performance and cost may differ.

The sections below describe each evaluator family and the evaluator categories available on the platform.

GLIDER

GLIDER is a 3B parameter evaluator model that can be used to set up any custom evaluation. It performs the evaluation based on pass criteria and score rubrics, and it has a context window of 8K tokens. To learn more about how to use GLIDER, check out our detailed documentation page.

Required Input Fields

No required fields

Optional Input Fields

evaluated_model_input, evaluated_model_output, evaluated_model_gold_answer, evaluated_model_retrieved_context

Aliases

| Alias | Target |
|---|---|
| glider | glider-2024-12-11 |

Judge

Judge evaluators perform evaluations based on pass criteria defined in natural language, such as "The MODEL OUTPUT should be free from brackets". Judge evaluators also support active learning, which means that you can improve their performance by annotating historical evaluations with thumbs up or thumbs down. To learn more about Judge evaluators, visit their documentation page.

Required Input Fields

No required fields

Optional Input Fields

evaluated_model_input, evaluated_model_output, evaluated_model_gold_answer, evaluated_model_retrieved_context

Aliases

| Alias | Target |
|---|---|
| judge | judge-large-2024-08-08 |
| judge-small | judge-small-2024-08-08 |
| judge-large | judge-large-2024-08-08 |
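The alias table above can be read as a simple lookup from a short name to a dated evaluator ID. The sketch below shows that reading for the Judge family. The names `JUDGE_ALIASES` and `resolve_evaluator` are hypothetical helpers written for this example and are not part of the Patronus SDK; the mapping itself is copied from the table.

```python
# Alias -> dated evaluator ID, copied from the Judge alias table above.
JUDGE_ALIASES = {
    "judge": "judge-large-2024-08-08",
    "judge-small": "judge-small-2024-08-08",
    "judge-large": "judge-large-2024-08-08",
}


def resolve_evaluator(name: str) -> str:
    """Return the dated evaluator ID for an alias.

    Names that are not aliases (for example an ID that is already dated)
    are returned unchanged.
    """
    return JUDGE_ALIASES.get(name, name)


print(resolve_evaluator("judge"))
print(resolve_evaluator("judge-small-2024-08-08"))
```

Recording the resolved, dated ID alongside your results is one way to keep track of exactly which evaluator version produced them.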

Evaluators

| Evaluator ID | Description |
|---|---|
| judge-small-2024-08-08 | The fastest and cheapest evaluator in the family |
| judge-large-2024-08-08 | The most sophisticated evaluator in the family, using advanced reasoning to achieve high correctness |

Retrieval systems

| Evaluator | Definition | Required Fields | Max Input Tokens | Raw Scores Provided |
|---|---|---|---|---|
| answer-relevance-small | Checks whether the answer is on-topic to the input question. Does not measure correctness. | evaluated_model_input, evaluated_model_output | 124k | Yes |
| answer-relevance-large | Checks whether the answer is on-topic to the input question. Does not measure correctness. | evaluated_model_input, evaluated_model_output | 124k | Yes |
| context-relevance-small | Checks whether the retrieved context is on-topic to the input. | evaluated_model_input, evaluated_model_retrieved_context | 124k | Yes |
| context-relevance-large | Checks whether the retrieved context is on-topic to the input. | evaluated_model_input, evaluated_model_retrieved_context | 124k | Yes |
| context-sufficiency-small | Checks whether the retrieved context is sufficient to generate an output similar in meaning to the label. The label should be the correct evaluation result. | evaluated_model_input, evaluated_model_retrieved_context, evaluated_model_output, evaluated_model_gold_answer | 124k | Yes |
| context-sufficiency-large | Checks whether the retrieved context is sufficient to generate an output similar in meaning to the label. The label should be the correct evaluation result. | evaluated_model_input, evaluated_model_retrieved_context, evaluated_model_output, evaluated_model_gold_answer | 124k | Yes |
| hallucination-small | Checks whether the LLM response is hallucinatory, i.e. the output is not grounded in the provided context. | evaluated_model_input, evaluated_model_output, evaluated_model_retrieved_context | 124k | Yes |
| hallucination-large | Checks whether the LLM response is hallucinatory, i.e. the output is not grounded in the provided context. | evaluated_model_input, evaluated_model_output, evaluated_model_retrieved_context | 124k | Yes |
| lynx-small | Checks whether the LLM response is hallucinatory, i.e. the output is not grounded in the provided context. Uses Patronus Lynx to power the evaluation. See the research paper here. | evaluated_model_input, evaluated_model_output, evaluated_model_retrieved_context | 124k | Yes |
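The Required Fields column above lends itself to a client-side pre-check before a sample is submitted. The following is a minimal sketch under stated assumptions: `REQUIRED_FIELDS` and `missing_fields` are hypothetical names, not part of the Patronus SDK; the field lists are copied from the table for four of the evaluators; representing a sample as a flat dictionary of strings and treating an empty value as missing are choices made for this example.

```python
# Required fields per evaluator, copied from the table above (subset only).
REQUIRED_FIELDS = {
    "answer-relevance-small": (
        "evaluated_model_input",
        "evaluated_model_output",
    ),
    "context-relevance-small": (
        "evaluated_model_input",
        "evaluated_model_retrieved_context",
    ),
    "hallucination-small": (
        "evaluated_model_input",
        "evaluated_model_output",
        "evaluated_model_retrieved_context",
    ),
    "lynx-small": (
        "evaluated_model_input",
        "evaluated_model_output",
        "evaluated_model_retrieved_context",
    ),
}


def missing_fields(evaluator: str, sample: dict) -> list:
    """List the required fields that are absent or empty in the sample."""
    return [name for name in REQUIRED_FIELDS[evaluator] if not sample.get(name)]


sample = {
    "evaluated_model_input": "What is the capital of France?",
    "evaluated_model_output": "Paris is the capital of France.",
}

# The sample has no retrieved context, so it suits answer relevance
# but not hallucination detection.
print(missing_fields("answer-relevance-small", sample))
print(missing_fields("hallucination-small", sample))
```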

Answer Relevance

Checks whether the model output is relevant to the input question. Does not evaluate factual correctness. Useful for prompt engineering on retrieval systems when trying to improve the relevance of model output to the user query.

Required Input Fields

evaluated_model_input, evaluated_model_output

Optional Input Fields

None

Evaluators

| Evaluator ID | Description |
|---|---|
| answer-relevance-small-2024-07-23 | The fastest and cheapest evaluator in the family |
| answer-relevance-large-2024-07-23 | The most sophisticated evaluator in the family, using advanced reasoning to achieve high correctness |

Aliases

| Alias | Target |
|---|---|
| answer-relevance | answer-relevance-large-2024-07-23 |
| answer-relevance-small | answer-relevance-small-2024-07-23 |
| answer-relevance-large | answer-relevance-large-2024-07-23 |

Context Relevance

Checks whether the retrieved context is on-topic or relevant to the input question. Useful when checking the retriever performance of a retrieval system.

Required Input Fields

evaluated_model_input, evaluated_model_retrieved_context

Optional Input Fields

None

Evaluators

| Evaluator ID | Description |
|---|---|
| context-relevance-small-2024-07-23 | The fastest and cheapest evaluator in the family |
| context-relevance-large-2024-07-23 | The most sophisticated evaluator in the family, using advanced reasoning to achieve high correctness |

Aliases

| Alias | Target |
|---|---|
| context-relevance | context-relevance-large-2024-07-23 |
| context-relevance-small | context-relevance-small-2024-07-23 |
| context-relevance-large | context-relevance-large-2024-07-23 |

Context Sufficiency

Checks whether the retrieved context is sufficient to generate an output similar to the gold label. The gold label is the correct evaluation result. Useful when checking the retriever performance of a retrieval system.

Required Input Fields

evaluated_model_input, evaluated_model_gold_answer, evaluated_model_retrieved_context

Optional Input Fields

None

Evaluators

| Evaluator ID | Description |
|---|---|
| context-sufficiency-small-2024-07-23 | The fastest and cheapest evaluator in the family |
| context-sufficiency-large-2024-07-23 | The most sophisticated evaluator in the family, using advanced reasoning to achieve high correctness |

Aliases

| Alias | Target |
|---|---|
| context-sufficiency | context-sufficiency-large-2024-07-23 |
| context-sufficiency-small | context-sufficiency-small-2024-07-23 |
| context-sufficiency-large | context-sufficiency-large-2024-07-23 |

Hallucination (non-Lynx)

Checks whether the LLM response is hallucinating information that is not present in the context.

Required Input Fields

evaluated_model_input, evaluated_model_output, evaluated_model_retrieved_context

Optional Input Fields

None

Evaluators

| Evaluator ID | Description |
|---|---|
| hallucination-small-2024-07-23 | The fastest and cheapest evaluator in the family |
| hallucination-large-2024-07-23 | The most sophisticated evaluator in the family, using advanced reasoning to achieve high correctness |

Aliases

| Alias | Target |
|---|---|
| hallucination | hallucination-large-2024-07-23 |
| hallucination-small | hallucination-small-2024-07-23 |
| hallucination-large | hallucination-large-2024-07-23 |

Hallucination (Lynx)

Checks whether the LLM response is hallucinating information that is not present in the context. Fine-tuned for domain context. See the research paper here.

Required Input Fields

evaluated_model_input, evaluated_model_output, evaluated_model_retrieved_context

Optional Input Fields

None

Evaluators

| Evaluator ID | Description |
|---|---|
| lynx-small-2024-07-23 | The best evaluator in the family, using an 8B parameter model to generate reliable and quick evaluations |

Aliases

| Alias | Target |
|---|---|
| lynx | lynx-small-2024-07-23 |
| lynx-small | lynx-small-2024-07-23 |

OWASP

| Evaluator | Definition | Required Fields | Max Input Tokens | Raw Scores Provided |
|---|---|---|---|---|
| answer-refusal | Judge evaluator that checks whether "MODEL OUTPUT" refuses to answer the user input. Useful to check whether your model output is correctly handling prompt injections and off-topic user inputs. | evaluated_model_output | 124k | Yes |
| prompt-injection | Judge evaluator that checks whether "MODEL INPUT" contains prompt injections. | evaluated_model_input | 124k | Yes |
| toxicity | Checks output for abusive and hateful messages. | evaluated_model_output | 512 | Yes |
| pii | Checks for personally identifiable information (PII). PII is information that, in conjunction with other data, can identify an individual. | evaluated_model_output | 16k | No |
| no-openai-reference | Judge evaluator that checks whether "MODEL OUTPUT" contains a reference to OpenAI. | evaluated_model_output | 124k | Yes |

NLP

Computes common NLP metrics on the output and gold answer fields to measure overlap and similarity. Currently supports the BLEU and ROUGE metrics.

Required Input Fields

evaluated_model_output, evaluated_model_gold_answer

Optional Input Fields

None

Evaluators

| Evaluator ID | Description |
|---|---|
| nlp-2024-05-16 | Computes common NLP metrics |

Aliases

| Alias | Target |
|---|---|
| nlp | nlp-2024-05-16 |

Criteria

| Criteria Name | Description |
|---|---|
| patronus:bleu | Computes the BLEU score focused on precision. |
| patronus:rouge | Computes the ROUGE score focused on recall. |
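To make the precision versus recall distinction concrete, the sketch below computes unigram precision and unigram recall between an output and a gold answer. This is a deliberate simplification and not the Patronus implementation: full BLEU combines clipped n-gram precisions with a brevity penalty, and ROUGE comes in several variants. The function names and the lowercase, whitespace-based tokenization are assumptions made for this example.

```python
from collections import Counter


def _tokens(text: str) -> list:
    """Lowercase and split on whitespace (a simplistic tokenizer)."""
    return text.lower().split()


def _overlap(output: str, gold: str) -> int:
    """Count tokens shared by both texts, clipped to the smaller count."""
    shared = Counter(_tokens(output)) & Counter(_tokens(gold))
    return sum(shared.values())


def unigram_precision(output: str, gold: str) -> float:
    """Share of output tokens that also appear in the gold answer (BLEU-like)."""
    total = len(_tokens(output))
    return _overlap(output, gold) / total if total else 0.0


def unigram_recall(output: str, gold: str) -> float:
    """Share of gold answer tokens that the output recovers (ROUGE-like)."""
    total = len(_tokens(gold))
    return _overlap(output, gold) / total if total else 0.0


output = "the cat sat"
gold = "the cat sat on the mat"

print(unigram_precision(output, gold))  # 1.0: every output token is in the gold answer
print(unigram_recall(output, gold))     # 0.5: only 3 of the 6 gold tokens are covered
```

A short output that copies part of the gold answer scores high on precision and low on recall, which is why the two criteria are worth reading together.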

Harmful outputs

| Evaluator | Definition | Required Fields | Max Input Tokens | Raw Scores Provided |
|---|---|---|---|---|
| no-age-bias | Judge evaluator that checks whether "MODEL OUTPUT" is biased by ages mentioned in the user input. Useful to ensure your model outputs are consistent, even as user age fluctuates. | evaluated_model_output | 124k | Yes |
| no-racial-bias | Judge evaluator that checks whether "MODEL OUTPUT" contains racial stereotypes. Use to mitigate PR risk from racist model outputs. | evaluated_model_input | 124k | Yes |
| no-gender-bias | Judge evaluator that checks whether "MODEL OUTPUT" contains gender stereotypes. Use to mitigate PR risk from sexist or gendered model outputs. | evaluated_model_output | 512 | Yes |
| toxicity | Checks output for abusive and hateful messages. | evaluated_model_output | 512 | Yes |

Toxicity

Checks output for abusive and hateful messages.

Required Input Fields

evaluated_model_output

Optional Input Fields

None

Evaluators

| Evaluator ID | Description |
|---|---|
| toxicity-2024-10-27 | Detects toxicity using an internal Patronus model |
| toxicity-2024-07-23 | Detects toxicity using the Perspective API |

Aliases

| Alias | Target |
|---|---|
| toxicity | toxicity-2024-10-27 |
| toxicity-perspective-api | toxicity-2024-07-23 |

Chatbot behavior

| Evaluator | Definition | Required Fields | Max Input Tokens | Raw Scores Provided |
|---|---|---|---|---|
| is-concise | Judge evaluator that checks whether "MODEL OUTPUT" is concise. | evaluated_model_output | 124k | Yes |
| no-apologies | Judge evaluator that checks whether "MODEL OUTPUT" does not contain apologies. Useful if you want your model to communicate difficult messages clearly, uncluttered by apologies. | evaluated_model_output | 124k | Yes |
| is-polite | Judge evaluator that checks whether "MODEL OUTPUT" is polite in conversation. Very useful for chatbot use cases. | evaluated_model_output | 124k | Yes |
| is-helpful | Judge evaluator that checks whether your model is helpful in its tone of voice. Very useful for chatbot use cases. | evaluated_model_output | 124k | Yes |
| no-openai-reference | Judge evaluator that checks whether your model refers to being made by OpenAI. | evaluated_model_output | 124k | Yes |

Output format

| Evaluator | Definition | Required Fields | Max Input Tokens | Raw Scores Provided |
|---|---|---|---|---|
| is-code | Judge evaluator that checks whether "MODEL OUTPUT" is valid code. Use this profile to check that your code copilot or AI coding assistant is producing expected outputs. | evaluated_model_output | 124k | Yes |
| is-csv | Judge evaluator that checks whether "MODEL OUTPUT" is a valid CSV document. Useful if you’re parsing your model outputs and want to ensure they are CSV. | evaluated_model_output | 124k | Yes |
| is-json | Judge evaluator that checks whether "MODEL OUTPUT" is valid JSON. Useful if you’re parsing your model outputs and want to ensure they are JSON. | evaluated_model_output | 124k | Yes |
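The evaluators above are Judge evaluators, so they rely on a model rather than a parser. If your pipeline also needs a deterministic check on your side, a strict local parse can complement them. The sketch below is such a local check using Python's standard `json` module; it is a different technique from the `is-json` evaluator and says nothing about how that evaluator works internally. The function name `is_valid_json` is chosen for this example.

```python
import json


def is_valid_json(text: str) -> bool:
    """Return True if the text parses as JSON with the standard library parser."""
    try:
        json.loads(text)
    except json.JSONDecodeError:
        return False
    return True


print(is_valid_json('{"answer": 42}'))  # True
print(is_valid_json("{answer: 42}"))    # False: object keys must be quoted strings
```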

Data leakage

| Evaluator | Definition | Required Fields | Max Input Tokens | Raw Scores Provided |
|---|---|---|---|---|
| pii | Checks for personally identifiable information (PII). PII is information that, in conjunction with other data, can identify an individual. | evaluated_model_output | 16k | No |
| phi | Checks for protected health information (PHI), defined broadly as any information about an individual's health status or provision of healthcare. | evaluated_model_output | 16k | No |

PII (Personally Identifiable Information)

Checks for personally identifiable information (PII). PII is information that, in conjunction with other data, can identify an individual.

Required Input Fields

evaluated_model_output

Optional Input Fields

None

Evaluators

| Evaluator ID | Description |
|---|---|
| pii-2024-05-31 | PII detection in model outputs |

Aliases

| Alias | Target |
|---|---|
| pii | pii-2024-05-31 |

PHI (Protected Health Information)

Checks for protected health information (PHI), defined broadly as any information about an individual's health status or provision of healthcare.

Required Input Fields

evaluated_model_output

Optional Input Fields

None

Evaluators

| Evaluator ID | Description |
|---|---|
| phi-2024-05-31 | PHI detection in model outputs |

Aliases

| Alias | Target |
|---|---|
| phi | phi-2024-05-31 |

Output validation

| Evaluator | Definition | Required Fields | Max Input Tokens | Raw Scores Provided |
|---|---|---|---|---|
| exact-match | Checks if two strings are exactly identical. | evaluated_model_output, evaluated_model_gold_answer | 32k | No |
| fuzzy-match | Judge evaluator that checks that your model output is semantically similar to the provided gold answer. | evaluated_model_output, evaluated_model_gold_answer | 124k | Yes |

Exact Match

Checks that your model output is the exact same string as the provided gold answer. Useful for checking boolean or multiple choice model outputs.
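A strict string comparison of this kind can be sketched in a few lines. The function below illustrates the idea for a multiple choice answer; it is not the evaluator's implementation, the name `exact_match` is chosen for this example, and whether the hosted evaluator trims whitespace or ignores case is not stated in this guide, so the sketch assumes neither.

```python
def exact_match(output: str, gold_answer: str) -> bool:
    """Pass only when the two strings are identical, character for character."""
    return output == gold_answer


print(exact_match("B", "B"))   # True
print(exact_match("B", "b"))   # False: case differs
print(exact_match("B ", "B"))  # False: trailing space
```

Under this strict reading, stray whitespace or a change in case is enough to fail, so it can help to normalize model outputs before comparing them.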

Required Input Fields

evaluated_model_output, evaluated_model_gold_answer

Optional Input Fields

None

Evaluators

| Evaluator ID | Description |
|---|---|
| exact-match-2024-05-31 | Checks that model output and gold answer are the same |

Aliases

| Alias | Target |
|---|---|
| exact-match | exact-match-2024-05-31 |