Model Installation Steps

Now that you have the Patronus AI platform up and running in your environment, you can customize it with models and datasets! :fire:

Model Integrations

Many Patronus AI evaluators are powered by LLMs. In our self-hosted platform, you can configure which backbone LLMs are used as evaluation models.

We support the following kinds of model integrations:

- OpenAI: OpenAI model integrations query OpenAI with the specified model version. You can configure OpenAI API keys in the admin portal.

- HTTP: HTTP integrations allow you to query any custom LLM. This includes models hosted on your cluster, Azure OpenAI, and externally hosted open source models. Popular HTTP integrations include:

  - Azure OpenAI: Azure OpenAI integrations support any model deployed in your Azure account. You only need to provide your Azure API key.

  - Self-hosted models: To self-host models from Patronus AI, ensure you have enough GPU resources to support the model you want to deploy. You then configure the internal endpoint as an HTTP integration. See the Dockerized Models section below for more information.

Updating Model Integrations (For On-Prem Deployments)

For on-prem deployments we may have already created model integration templates for you.

- Verify that the model proxy API service is up and running with `kubectl get pods -n <your_namespace>`.

- Use port forwarding to expose the model proxy API service locally.

- Update the model integration ID.

You can now test the success of your integration by visiting the admin portal and clicking "test integration"!
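The first two steps above can be sketched as a small Python helper that builds the `kubectl` invocations. This is only an illustration: the service name `model-proxy-api`, the namespace `patronus`, and both port numbers are assumptions, not values taken from your deployment, so substitute the ones from your cluster.

```python
import shlex
from typing import List


def kubectl_get_pods(namespace: str) -> List[str]:
    """Build the command that lists pods in the given namespace."""
    return ["kubectl", "get", "pods", "-n", namespace]


def kubectl_port_forward(
    namespace: str, service: str, local_port: int, remote_port: int
) -> List[str]:
    """Build the command that forwards a local port to a cluster service."""
    return [
        "kubectl",
        "port-forward",
        f"svc/{service}",
        f"{local_port}:{remote_port}",
        "-n",
        namespace,
    ]


if __name__ == "__main__":
    # Hypothetical values: replace with your namespace, service name, and ports.
    commands = [
        kubectl_get_pods("patronus"),
        kubectl_port_forward("patronus", "model-proxy-api", 8080, 80),
    ]
    for cmd in commands:
        # Print each command so it can be reviewed before running it.
        print(shlex.join(cmd))
```

Each helper returns an argument list, so the commands can be passed to `subprocess.run` once you have confirmed the values match your environment.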

Registering model integrations

To register a new model integration, run the following cURL request. Note: if you set up port forwarding to the model proxy API, replace <model-proxy-api.internal.patronus.ai> with your localhost address.

To update the model integration:

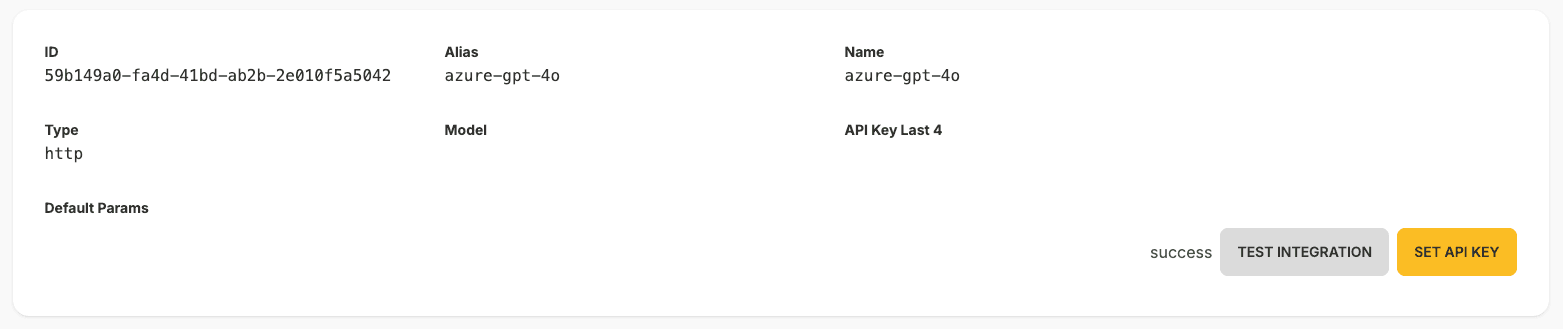

You can view your model integrations in the Admin Portal. To test the success of the model integration, click "test". You should see "Success" if the integration was successful.

Note: Currently you can set the API key for OpenAI models in the admin portal, but not for HTTP integrations.
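When port forwarding is in place, the host substitution described in the registration note can be sketched as a small URL rewrite. The function name `to_local_url`, the port `8080`, and the path `/placeholder/path` are illustrative assumptions. The actual request path is not shown here and must come from your deployment.

```python
from urllib.parse import urlsplit


def to_local_url(url: str, local_port: int, local_host: str = "127.0.0.1") -> str:
    """Swap the host of `url` for a local address, keeping scheme, path, and query."""
    parts = urlsplit(url)
    return parts._replace(netloc=f"{local_host}:{local_port}").geturl()


if __name__ == "__main__":
    # Hypothetical path and port, used only to show the rewrite.
    internal = "http://model-proxy-api.internal.patronus.ai/placeholder/path"
    print(to_local_url(internal, 8080))
```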

Dockerized Models

For users who wish to use open source models or models we have trained in-house, we support dockerized model containers. To deploy a dockerized model, first ensure you meet the following requirements.

Requirements

- A resource pool with a minimum of 80GB of GPU memory. An option to scale up using the resource pool is provided.

- Containers with LLM models use a base image of `nvidia/cuda:12.1.1-cudnn8-runtime-ubuntu22.04`. This CUDA version has to be compatible with your cluster setup.

- Containers with LLM models may require a dsn configuration within the cluster if a direct pod reference in the `model_proxy` data does not work. This is because deployed LLM model services are accessed by one of the services (`model_proxy`) over HTTP, and need to be accessible from within the cluster. Additionally, a database seed requires this information. Mapping example:

  - `mistralai-mistral-7b-v0-3` - dsn: `models-mistralai-mistral-7b-v0-3.models.internal.patronus.ai`

  - `patronusai-lynx-8b-v1-1` - dsn: `models-patronusai-lynx-8b-v1-1.models.internal.patronus.ai`
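The two entries in the mapping example appear to follow one naming pattern, which can be sketched as follows. The pattern is inferred only from those two entries, and the helper name `model_dsn` and its `domain` default are illustrative assumptions. Confirm the actual hostnames against your cluster configuration.

```python
def model_dsn(model_name: str, domain: str = "models.internal.patronus.ai") -> str:
    """Derive a dsn from a model name, following the pattern in the mapping example."""
    # Assumed pattern: "models-<model name>.<domain>", inferred from two examples only.
    return f"models-{model_name}.{domain}"


if __name__ == "__main__":
    for name in ("mistralai-mistral-7b-v0-3", "patronusai-lynx-8b-v1-1"):
        print(f"{name} -> {model_dsn(name)}")
```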

The Postgres database needs to be accessible from within the cluster. Migration and metadata setup are done automatically with the provided script.